The risk of pasting confidential company data into ChatGPT

Experts warn that employees are providing sensitive corporate data to ChatGPT, the popular artificial intelligence chatbot.

Researchers from Cyberhaven Labs analyzed ChatGPT use by 1.6 million workers at companies across industries. They reported that, since the chatbot launched, 5.6% of them have used it in the workplace and 4.9% have provided company data to it.

ChatGPT can use this data to build its knowledge base, and information built on it may end up being shared publicly with other users. The use of ChatGPT is becoming a serious problem in the workplace because it can lead to the leak of sensitive and confidential data. For this reason, companies like JP Morgan and Verizon are blocking access to the chatbot over concerns about confidential data.

The experts reported that less than one percent (0.9%) of employees are responsible for 80% of the leaks caused by pasting company data into the chatbot. This percentage could rise rapidly in the coming months as the technology is integrated into multiple services, for example through the ChatGPT API.

Employees believe that the artificial intelligence chatbot developed by OpenAI can significantly improve their productivity.

The experts also warn that enterprise security software cannot monitor employees' use of ChatGPT or prevent the leak of sensitive or confidential company data.

“Since ChatGPT launched publicly, 5.6% of knowledge workers have tried using it at least once in the workplace. Furthermore, 2.3% of employees have put confidential company data into ChatGPT,” reads the report published by Cyberhaven Labs.

“Despite a growing number of companies outright blocking access to ChatGPT, usage continues to grow exponentially. On March 1, our product detected a record 3,381 attempts to paste corporate data into ChatGPT per 100,000 employees, defined as ‘data egress’ events in the chart below.”
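The report expresses paste attempts per 100,000 employees so that companies of very different sizes can be compared on the same scale. The sketch below shows that kind of normalization. It assumes simple linear scaling, because the report does not describe its exact method. The helper name `rate_per_100k` and the company figures are hypothetical.

```python
def rate_per_100k(event_count: int, employee_count: int) -> float:
    """Express a raw event count as a rate per 100,000 employees.

    Hypothetical helper: plain linear scaling, not Cyberhaven's
    documented methodology.
    """
    if employee_count <= 0:
        raise ValueError("employee_count must be positive")
    return event_count * 100_000 / employee_count


# Hypothetical companies: a small one and a large one.
small = rate_per_100k(34, 1_000)      # 34 paste events, 1,000 employees
large = rate_per_100k(1_700, 50_000)  # 1,700 paste events, 50,000 employees
print(small, large)  # 3400.0 3400.0
```

The raw counts differ by a factor of 50. Once normalized, however, both companies show the same rate, and that is the property a per-100,000 figure such as 3,381 relies on.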

The researchers also monitored workers copying data out of the chatbot and pasting it elsewhere, such as a company email, a Google Doc, or their source code editor. They found that employees copy data out of the chatbot more often than they paste company data into it, at a nearly 2-to-1 ratio.

The researchers pointed out that the average company leaks sensitive data to ChatGPT hundreds of times each week.

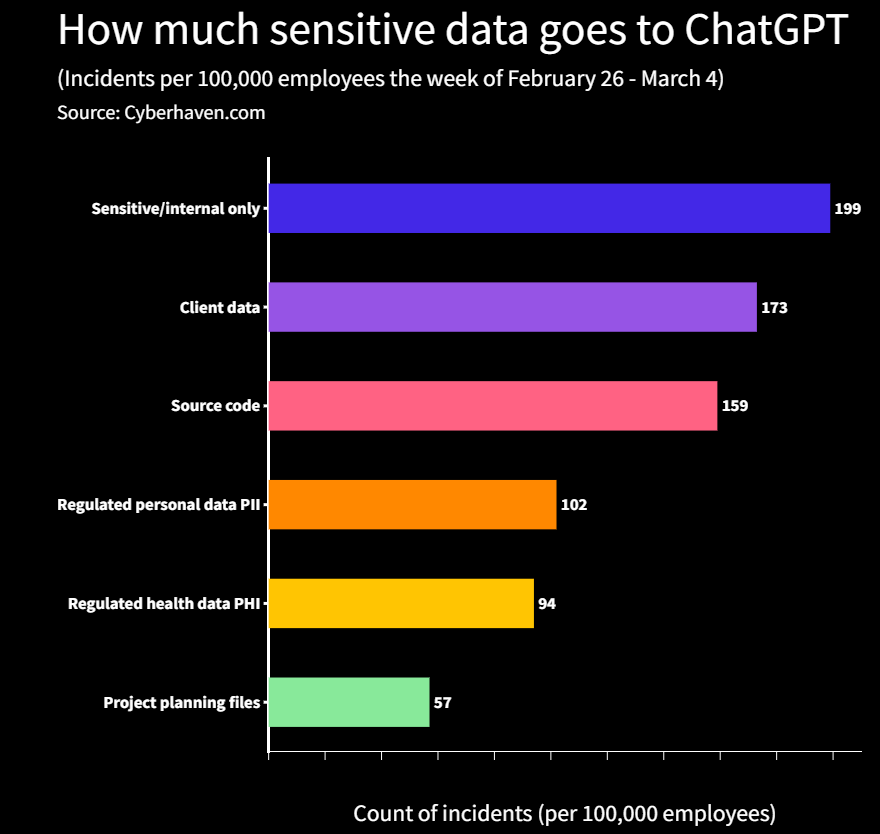

For example, during the week of February 26 – March 4, workers at the average company with 100,000 employees put confidential documents into the AI-based chatbot 199 times, client data 173 times, and source code 159 times.

We should not demonize the use of this popular platform in the workplace; however, it is essential to raise awareness about the risks of improper use of such technology.

Let me close with a question I asked the chatbot: How risky is it to provide company data to ChatGPT?

“As a large language model, ChatGPT doesn’t have the ability to store or acquire company or personal data. This means that there is no inherent risk in using ChatGPT to discuss general issues or ask knowledge-related questions. However, if you provide confidential or sensitive information about a company or person, that information could be vulnerable to potential security or privacy threats associated with online communication. In general, it’s always important to exercise due diligence when discussing sensitive information online. In summary, ChatGPT doesn’t represent a risk to the security of company data in itself, but it’s always important to be mindful of online privacy and security and carefully consider what kind of information you share on a chatbot platform.”

Follow me on Twitter: @securityaffairs and Facebook and Mastodon

(SecurityAffairs – hacking, AI)
