VoidLink Linux Malware Framework Built with AI Assistance Reaches 88,000 Lines of Code

The recently discovered sophisticated Linux malware framework known as VoidLink is assessed to have been developed by a single person with assistance from an artificial intelligence (AI) model.

That’s according to new findings from Check Point Research, which identified operational security blunders by the malware’s author that provided clues to its developmental origins. The latest insight makes VoidLink one of the first instances of advanced malware largely generated using AI.

“These materials provide clear evidence that the malware was produced predominantly through AI-driven development, reaching a first functional implant in under a week,” the cybersecurity company said, adding that the framework had grown to more than 88,000 lines of code by early December 2025.

VoidLink, first publicly documented last week, is a feature-rich malware framework written in Zig that’s specifically designed for long-term, stealthy access to Linux-based cloud environments. The malware is said to have come from a Chinese-affiliated development environment. As of writing, the exact purpose of the malware remains unclear. No real-world infections have been observed to date.

A follow-up analysis from Sysdig was the first to highlight that the toolkit may have been developed with the help of a large language model (LLM) under the direction of a human with extensive kernel development knowledge and red team experience, citing four pieces of evidence:

- Overly systematic debug output with perfectly consistent formatting across all modules

- Placeholder data (“John Doe”) typical of LLM training examples, embedded in decoy response templates

- Uniform API versioning where everything is _v3 (e.g., BeaconAPI_v3, docker_escape_v3, timestomp_v3)

- Template-like JSON responses covering every possible field
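To make the versioning indicator concrete, here is a minimal sketch of how an analyst might measure how uniform `_vN` suffixes are across a set of identifiers. It is not Sysdig's method; the function name `version_suffix_uniformity` and the regular expression are hypothetical, and only the three sample names come from the report. A high share on its own proves nothing about authorship and would at most be one weak signal among several.

```python
import re
from collections import Counter

# Hypothetical pattern for this sketch: a trailing version suffix such as "_v3".
VERSION_SUFFIX = re.compile(r"_v(\d+)$")


def version_suffix_uniformity(identifiers):
    """Return (most_common_version, share) among identifiers with a _vN suffix.

    Identifiers without a suffix are ignored. Returns (None, 0.0) when no
    identifier carries a version suffix at all.
    """
    versions = []
    for name in identifiers:
        match = VERSION_SUFFIX.search(name)
        if match:
            versions.append(int(match.group(1)))
    if not versions:
        return None, 0.0
    version, count = Counter(versions).most_common(1)[0]
    return version, count / len(versions)


# Sample names quoted in the Sysdig analysis.
names = ["BeaconAPI_v3", "docker_escape_v3", "timestomp_v3"]
version, share = version_suffix_uniformity(names)
print(f"most common version: v{version}, share of versioned names: {share:.0%}")
```

In an organically grown codebase, one would usually expect a mix of version numbers as components evolve at different rates, which is why a single version across every module stood out to the researchers.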

“The most likely scenario: a skilled Chinese-speaking developer used AI to accelerate development (generating boilerplate, debug logging, JSON templates) while providing the security expertise and architecture themselves,” the cloud security vendor noted late last week.

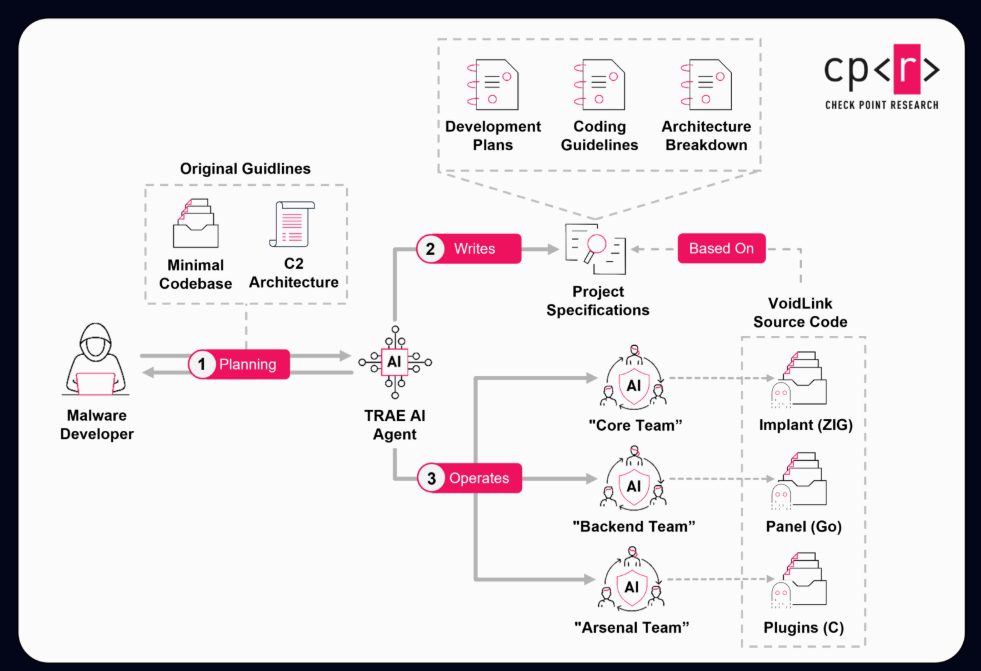

Check Point’s Tuesday report backs up this hypothesis, stating it identified artifacts suggesting that the development in itself was engineered using an AI model, which was then used to build, execute, and test the framework – effectively turning what was a concept into a working tool within an accelerated timeline.

Figure: High-level overview of the VoidLink Project

“The general approach to developing VoidLink can be described as Spec Driven Development (SDD),” it noted. “In this workflow, a developer begins by specifying what they’re building, then creates a plan, breaks that plan into tasks, and only then allows an agent to implement it.”

It’s believed that the threat actor commenced work on VoidLink in late November 2025, leveraging a coding agent known as TRAE SOLO to carry out the tasks. This assessment is based on the presence of TRAE-generated helper files that were copied along with the source code to the threat actor’s server and later leaked in an exposed open directory.

In addition, Check Point said it uncovered internal planning material written in Chinese related to sprint schedules, feature breakdowns, and coding guidelines that have all the hallmarks of LLM-generated content — well-structured, consistently formatted, and meticulously detailed. One such document detailing the development plan was created on November 27, 2025.

The documentation is said to have been repurposed as an execution blueprint for the LLM to follow in building and testing the malware. Check Point, which replicated the implementation workflow using the same TRAE IDE as the developer, found that the model generated code that resembled VoidLink’s source code.

Figure: Translated development plan for three teams: Core, Arsenal, and Backend

“A review of the code standardization instructions against the recovered VoidLink source code shows a striking level of alignment,” it said. “Conventions, structure, and implementation patterns match so closely that it leaves little room for doubt: the codebase was written to those exact instructions.”

The development is yet another sign that, while AI and LLMs may not equip bad actors with novel capabilities, they can further lower the barrier to entry for malicious actors. They empower even a single individual to envision, create, and iterate on complex systems quickly and to pull off sophisticated attacks, streamlining what was once a process that required significant effort and resources and was available only to nation-state adversaries.

“VoidLink represents a real shift in how advanced malware can be created. What stood out wasn’t just the sophistication of the framework, but the speed at which it was built,” Eli Smadja, group manager at Check Point Research, said in a statement shared with The Hacker News.

“AI enabled what appears to be a single actor to plan, develop, and iterate a complex malware platform in days – something that previously required coordinated teams and significant resources. This is a clear signal that AI is changing the economics and scale of cyber threats.”

In a whitepaper published this week, Group-IB described AI as supercharging a “fifth wave” in the evolution of cybercrime, offering ready-made tools to enable sophisticated attacks. “Adversaries are industrialising AI, turning once specialist skills such as persuasion, impersonation, and malware development into on-demand services available to anyone with a credit card,” it said.

The Singapore-headquartered cybersecurity company noted that dark web forum posts featuring AI keywords have seen a 371% increase since 2019, with threat actors advertising dark LLMs without any ethical restrictions, such as Nytheon AI, as well as jailbreak frameworks and synthetic identity kits offering AI video actors, cloned voices, and even biometric datasets for as little as $5.

“AI has industrialized cybercrime. What once required skilled operators and time can now be bought, automated, and scaled globally,” Craig Jones, former INTERPOL director of cybercrime and independent strategic advisor, said.

“While AI hasn’t created new motives for cybercriminals (money, leverage, and access still drive the ecosystem), it has dramatically increased the speed, scale, and sophistication with which those motives are pursued.”