Why and how to create corporate genAI policies

As a large number of companies continue to test and deploy generative artificial intelligence (genAI) tools, many are at risk of AI errors, malicious attacks, and running afoul of regulators — not to mention the potential exposure of sensitive data.

As a large number of companies continue to test and deploy generative artificial intelligence (genAI) tools, many are at risk of AI errors, malicious attacks, and running afoul of regulators — not to mention the potential exposure of sensitive data.

For example, in April, after Samsung’s semiconductor division allowed engineers to use ChatGPT, workers using the platform leaked trade secrets on least three instances, according to published accounts. One employee pasted confidential source code into the chat to check for errors, while another worker shared code with ChatGPT and “requested code optimization.”

ChatGPT is hosted by its developer, OpenAI, which asks users not to share any sensitive information because it cannot be deleted.

“It’s almost like using Google at that point,” said Matthew Jackson, global CTO at systems integration provider Insight Enterprises. “Your data is being saved by OpenAI. They’re allowed to use whatever you put into that chat window. You can still use ChatGPT to help write generic content, but you don’t want to paste confidential information into that window.”

The bottom line is that large language models (LLMs) and other genAI applications “are not fully baked,” according to Avivah Litan, a vice president and distinguished Gartner analyst. “They still have accuracy issues, liability and privacy concerns, security vulnerabilities, and can veer off in unpredictable or undesirable directions,” she said, “but they are entirely usable and provide an enormous boost to productivity and innovation.”

A recent Harris Poll found that business leaders’ top two reasons for rolling out genAI tools over the next year are to increase revenue and drive innovation. Almost half (49%) said keeping pace with competitors on tech innovation is a top challenge this year. (The Harris Poll surveyed 1,000 employees employed as directors or higher between April and May 2023.)

Those polled named employee productivity (72%) as the greatest benefit of AI, with customer engagement (via chatbots) and research and development taking second and third, respectively.

The Harris Poll/Insight

The Harris Poll/InsightAI adoption explodes

Within the next three years, most business leaders expect to adopt genAI to make employees more productive and enhance customer service, according to separate surveys by consultancy Ernst & Young (EY) and research firm The Harris Poll. And a majority of CEOs are integrating AI into products/services or planning to do so within 12 months.

“No corporate leader can ignore AI in 2023,” EY said in its survey report. “Eighty-two percent of leaders today believe organizations must invest in digital transformation initiatives, like generative AI, or be left behind.”

About half of respondents to The Harris Poll, which was commissioned by systems integration services vendor Insight Enterprises, indicated they’re embracing AI to ensure product quality and to address safety and security risks.

Forty-two percent of US CEOs surveyed by EY said they have already fully integrated AI-driven product or service changes into their capital allocation processes and are actively investing in AI-driven innovation, while 38% say they plan to make major capital investments in the technology over the next 12 months.

Insight

InsightJust over half (53%) of those surveyed expect to use genAI to assist with research and development, and 50% plan to use it for software development/testing, according to The Harris Poll.

While C-suite leaders recognize the importance of genAI, they also remain wary. Sixty-three percent of CEOs in the EY poll said it is a force for good and can drive business efficiency, but 64% believe not enough is being done to manage any unintended consequences of genAI use on business and society.

In light of the “unintended consequences of AI,” eight in 10 organizations have either put in place AI policies and strategies or are considering doing so, according to both polls.

AI problems and solutions

Generative AI was the second most-frequently named risk in Gartner’s second quarter survey, appearing in the top 10 for the first time, according to Ran Xu director, research in Gartner’s Risk & Audit Practice.

“This reflects both the rapid growth of public awareness and usage of generative AI tools, as well as the breadth of potential use cases, and therefore potential risks, that these tools engender,” Xu said in a statement.

Hallucinations, in which genAI apps present facts and data that look accurate and factual but are not, are a key risk. AI outputs are known to inadvertently infringe on the intellectual property rights of others. The use of genAI tools can raise privacy issues, as they may share user information with third parties, such as vendors or service providers, without prior notice. Hackers are using a method known as “prompt injection attacks” to manipulate how a large language model responds to queries.

“That’s one potential risk in that people may ask it a question and assume the data is correct and go off and make some important business decision with inaccurate data,” Jackson said. “That was the number one concern — using bad data. Number two in our survey was security.”

The Harris Poll/Insight

The Harris Poll/InsightThe problems organizations face when deploying genAI, Litan explained, lie in three main categories:

- Input and output, which includes unacceptable use that compromises enterprise decision-making and confidentiality, leaks of sensitive data, and inaccurate outputs (including hallucinations).

- Privacy and data protection, which includes data leaks via a hosted LLM vendor’s system, incomplete data privacy or protection policies, and a failure to meet regulatory compliance rules.

- Cybersecurity risks, which include hackers accessing LLMs and their parameters to influence AI outputs.

Mitigating those kinds of threats, Litan said, requires a layered security and risk management approach. There are several different ways organizations can reduce the prospect of unwanted or illegitimate inputs or outputs.

First, organizations should define policies for acceptable use and establish systems and processes to record requests to use genAI applications, including the intended use and the data being requested. GenAI application use should also require approvals by various overseers.

Organizations can also use input content filters for information submitted to hosted LLM environments. This helps screen inputs against enterprise policies for acceptable use.

Privacy and data protection risks can be mitigated by opting out of hosting a prompt data storage, and by making sure a vendor doesn’t use corporate data to train its models. Additionally, companies should comb through a hosting vendor’s licensing agreement, which defines the rules and its responsibility for data protection in its LLM environment.

Gartner

GartnerLastly, organizations need to be aware of prompt injection attacks, which is a malicious input designed to trick a LLM into changing its desired behavior. That can result in stolen data or customers being scammed by the generative AI systems.

Organizations need strong security around the local Enterprise LLM environment, including access management, data protection, and network and endpoint security, according to Gartner.

Litan recommends that genAI users deploy Security Service Edge software that combines networking and security together into a cloud-native software stack that protects an organization’s edges, its sites and applications.

Additionally, organizations should hold their LLM or genAI service providers accountable for how they prevent indirect prompt injection attacks on their LLMs, over which a user organization has no control or visibility.

AI’s advantages can outweigh its risks

One mistake companies make is to decide that it’s not worth the risk to use AI, so “the first policy most companies come up with is ‘don’t use it,’” Insight’s Jackson said.

“That was our first policy as well,” he said. “But we very quickly stood up a private tenant using Microsoft’s OpenAI on Azure’s technology. So, we created an environment that was secure, where we were able to connect to some of our private enterprise data. So, that way we could allow people to use it.”

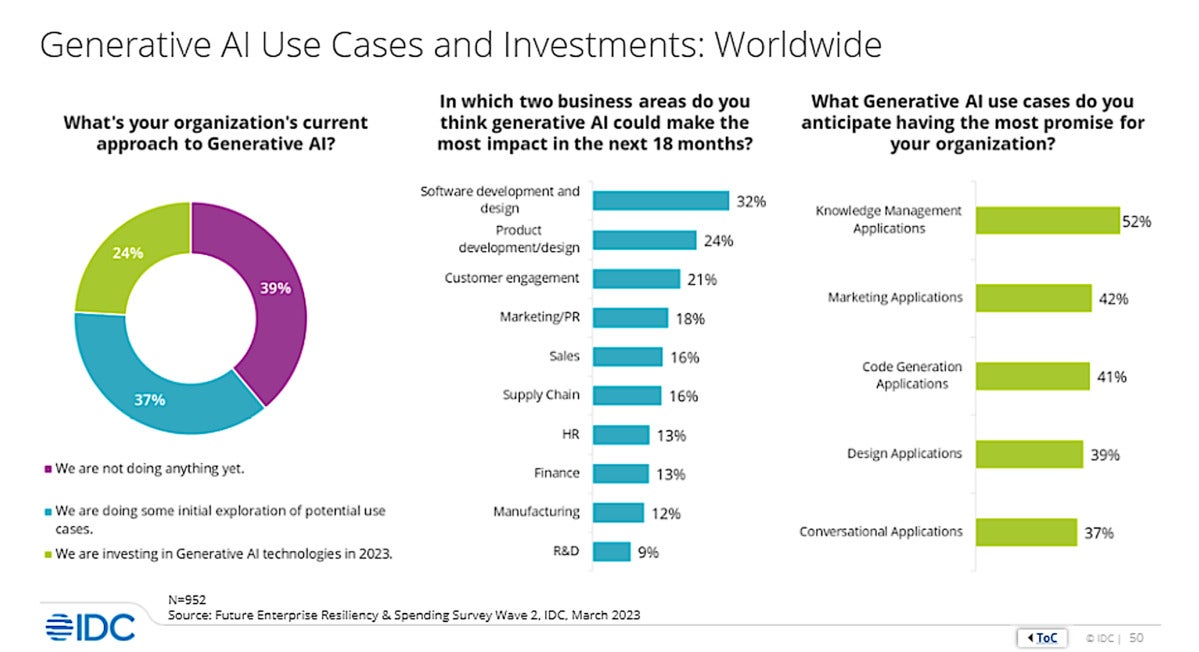

IDC

IDCOne Insight employee described the generative AI technology as being like Excel. “You don’t ask people how they’re going to use Excel before you give it to them; you just give it to them and they come up with all these creative ways to use it,” Jackson said.

Insight ended up talking to a lot of clients about genAI use cases considering the firm’s own experiences with the technology.

“One of the things that dawned on us with some of our pilots is AI’s really just a general productivity tool. It can handle so many use cases,” Jackson said. “…What we decided [was] rather than going through a long, drawn-out process to overly customize it, we were just going to give it out to some departments with some general frameworks and boundaries around what they could and couldn’t do — and then see what they came up with.”

One of the first tasks Insight Enterprises used ChatGPT for was in its distribution center, where clients purchase technology and the company then images those devices and sends them out to clients; the process is filled with mundane tasks, such as updating product statuses and supply systems.

“So, one of the folks in one of our warehouses realized if you can ask generative AI to write a script to automate some of these system updates,” Jackson said. “This was a practical use case that emerged from Insight’s crowd-sourcing of its own private, enterprise instance of ChatGPT, called Insight GPT, across the organization.”

The generative AI program wrote a short Python script for Insight’s warehouse operation that automated a significant number of tasks, and enabled system updates that could run against its SAP inventory system; it essentially automated a task that took people five minutes every time they had to make an update.

“So, there was a huge productivity improvement within our warehouse. When we rolled it out to the rest of the employees in that center, hundreds of hours a week were saved,” Jackson said.

Now, Insight is focusing on prioritizing critical use cases that may require more customization. That could include using prompt engineering to train the LLM differently or tying in more diverse or complicated back-end data sources.

Jackson described LLMs as a pretrained “black box,” with data they’re trained on typically a couple years old and excluding corporate data. Users can, however, instruct APIs to access corporate data like an advanced search engine. “So, that way you get access to more relevant and current content,” he said.

Insight is currently working with ChatGPT on a project to automate how contracts are written. Using a standard ChatGPT 4.0 model, the company connected it to its existing library of contracts, of which it has tens of thousands.

Organizations can use LLM extensions such as LangChain or Microsoft’s Azure Cognitive Search to discover corporate data relative to a task given the generative AI tool.

In Insight’s case, genAI will be used to discover which contracts the company won, prioritize those, and then cross-reference them against CRM data to automate writing future contracts for clients.

Some data sources, such as standard SQL databases or libraries of files, are easy to connect to; others, such as AWS cloud or custom storage environments, are more difficult to access securely.

“A lot of people think you need to retrain the model to get their own data into it, and that’s absolutely not the case; that can actually be risky, depending on where that model lives and how it’s executed,” Jackson said. “You can easily stand up one of these OpenAI models within Azure and then connect in your data within that private tenant.”

“History tells us if you give people the right tools, they become more productive and discover new ways to work to their benefit,” Jackson added. “Embracing this technology gives employees an unprecedented opportunity to evolve and elevate how they work and, for some, even discover new career paths.”