Western Australian agencies are assessing all their existing and planned automated decision-making and artificial intelligence projects under a mandatory risk regime that covers a broad range of technologies.

Minister for Innovation and the Digital Economy Stephen Dawson told iTnews that the AI Assurance Framework’s “primary goal” is “to enable safe and responsible use of AI by the WA public sector” and to “create the enabling environment for innovation to occur.”

“The framework aims to assist public servants in complying with the WA AI policy through the implementation of risk mitigation strategies and by establishing clear governance and accountability measures,” he said.

WA’s AI Advisory Board also reviews and provides feedback on two kinds of project. The first is any project that still carries mid-range or higher risks after mitigation controls are applied during the internal assessment. The second is any project that was financed through WA’s Digital Capability Fund or cost more than $5 million.

Managing AI risk

Internal assessments identify an AI or decision-making project’s risks by measuring how it could undermine fairness, explainability, accuracy in decision-making and the other AI ethics principles [pdf] that the framework aims to uphold.

“The framework provides a systematic approach for agencies to assess their AI systems, projects and data-driven tools against,” Dawson said.

“It is underpinned by five ethics principles and steps the user through questions pertaining to each principle.

“By utilising this logical and systematic approach, agencies can conduct their own assessments and rigorously manage risks as needed.”

In the second phase of the assessment, agencies pilot risk mitigation controls. These include building more human intervention into AI-assisted operations, reviewing the model’s training data and applying more tests to validate its outputs.

After implementing the mitigation strategies, the agency re-evaluates the project’s risks.

A wide range of technologies

The scheme, which became effective earlier this month, covers systems that generate “predictive outputs such as content, forecasts, recommendations, or decisions for a given set of human-defined objectives or parameters without explicit programming.”

The scheme [pdf] draws on the broad ISO/IEC 22989:2022 definition of AI. It captures both fully and partially automated decision-making systems, and it is not limited to solutions whose rules are set by advanced self-correcting processes such as random forest models or neural networks.

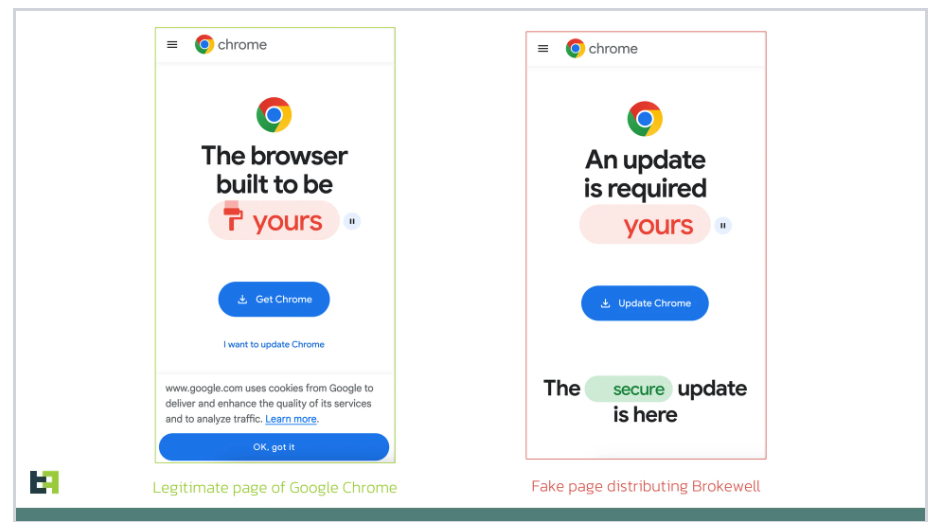

Consistent with lessons the federal government learnt from Robodebt, “rules-based automation” systems that “[do] not learn or adapt” still need to be risk-assessed, as do “generic AI platform[s]” that the agencies co-develop or train with their “own data”.

The framework is likely to prompt far more assessments and external reviews than NSW’s AI risk regime, which mandates similar assurance checks but has a narrower scope.

The NSW scheme is not retrospective. By contrast, WA public servants’ instructions [pdf] require them to assess “existing AI solutions and seek review by the WA AI Advisory Board where necessary.”

And, unlike the NSW regime, the WA scheme has no exemption for systems with “widely available commercial application…not being customised in any way”; WA agencies must assess the use of common tools like large language models [pdf].

Earlier this month, the NSW Ombudsman confirmed 275 public sector AI and automated decision tools and identified a further 702 in an open-source intelligence (OSINT) review. However, only 14 projects were externally reviewed in the NSW scheme’s first year and a half, according to documents [pdf] iTnews obtained under Freedom of Information laws.

Other states commit to AI risk regime

At a meeting in February, state and territory governments agreed to establish “an initial national framework for the assurance of artificial intelligence used by governments”.

All Data and Digital Ministers except those from Tasmania and the Northern Territory attended the meeting. They signed a joint statement saying the proposed framework “aligns with the Australian AI Ethics Principles and includes common assurance processes.”

The NSW government’s AI Assurance Framework is currently under review. When it launched in 2022, it was the first scheme in Australia to mandate risk assessment of government AI.

On March 11, NSW government chief information and digital officer Laura Christie told the state’s inquiry into AI that an “updated AI Assurance Framework will be released in late 2024.”

Dawson told iTnews that WA’s framework will also be regularly updated to keep up with advances in AI.

“The Office of Digital Government will continue to update the WA AI Policy materials and guidance to ensure they remain contemporary as the AI landscape evolves,” Dawson said.

“This includes working collaboratively with other Australian states and territories to ensure best practice is leveraged, and that there is alignment across jurisdictions particularly if regulatory approaches are developed at the Commonwealth and/or state government level.”