OpenAI, Anthropic Research Reveals More About How LLMs Impact Security and Bias

Given that large language models function using structures akin to neurons that might connect various concepts and modalities, it can pose a challenge for AI developers to modify their models to alter the behavior of the models. If the connections between different concepts are not known, identifying which connections to modify becomes complex.

Anthropic recently unveiled a highly detailed map of the internal workings of the refined edition of its Claude AI, specifically the Claude 3 Sonnet 3.0 model, which was brought to light on May 21. Approximately two weeks later, OpenAI shared its own study on unraveling how GPT-4 interprets patterns.

Through Anthropic’s map, researchers can delve into how feature points resembling neurons, influence a generative AI’s outcomes. Otherwise, individuals are restricted to merely observing the outcomes themselves.

Some of these features are categorized as “safety critical.” Identifying these features consistently could aid in adjusting generative AI to steer clear of potentially risky subjects or activities. These features play a pivotal role in adapting classification, which in turn could influence bias mitigation.

What did Anthropic uncover?

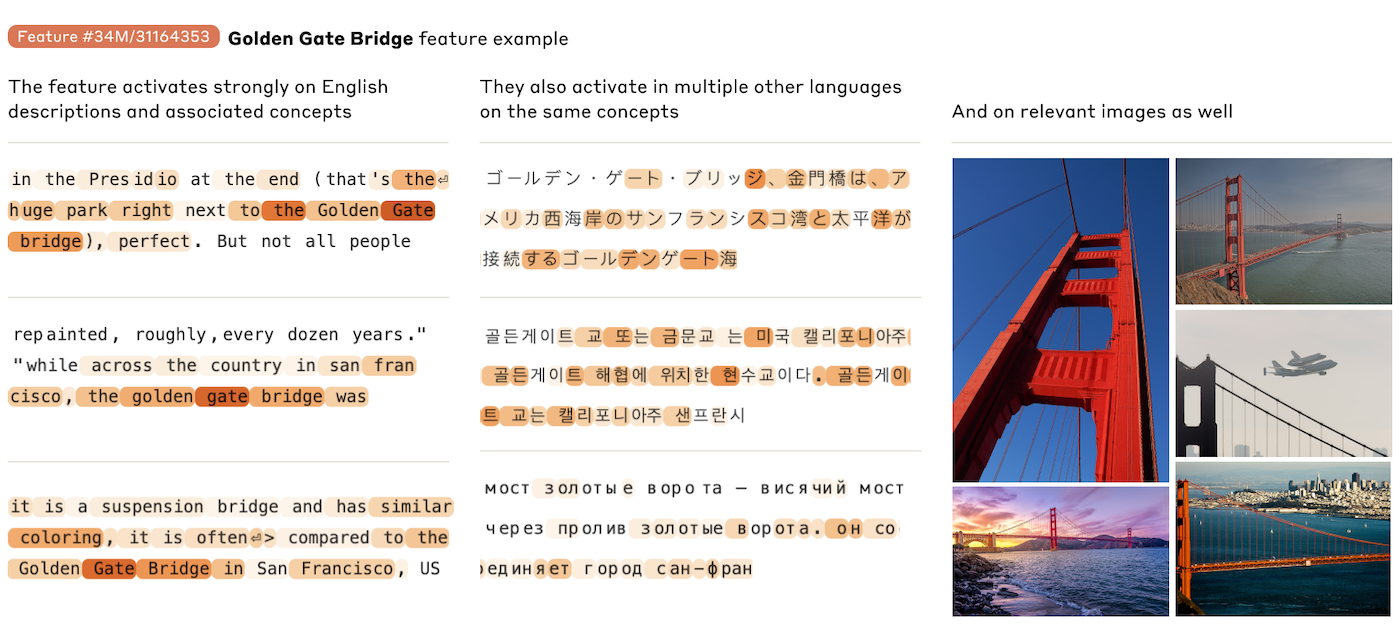

The researchers at Anthropic decoded interpretable features from Claude 3, a contemporary large language model. Interpretable features can be translated into human-understandable concepts from the numerical data processed by the model.

Interpretable features can be relevant to a single concept across various languages and are applicable to both images and texts.

“Our primary aim in this endeavor is to deconstruct the model’s (Claude 3 Sonnet) activations into more interpretable segments,” expressed the researchers.

“One expectation with interpretability is that it can serve as a form of ‘safety test set,’ allowing us to discern whether models appearing safe during training will indeed remain safe during deployment,” they elaborated.

EXPLORE: Anthropic’s Claude Team corporate plan offers an AI assistant tailored for small-to-medium enterprises.

Features are generated by sparse autoencoders, a neural network architecture. During the AI training phase, sparse autoencoders are steered by various factors, including scaling laws. Therefore, identifying features enables researchers to gain insights into the regulations governing the interrelation of topics associated by the AI. In simple terms, Anthropic utilized sparse autoencoders to unveil and analyze features.

“We uncovered a range of profoundly abstract features,” the researchers conveyed. “They (the features) both react to and instigate abstract behaviors.”

The intricacies of the hypotheses employed in trying to discern the underlying mechanisms of LLMs can be accessed through Anthropic’s research document.

What did OpenAI unveil?

OpenAI’s research, disclosed on June 6, delves into sparse autoencoders. The researchers delve into the details within their paper on scaling and evaluating sparse autoencoders; basically, the objective is to render features more comprehensible — hence more controllable — for humans. They are gearing up for a future where “frontier models” may be even more intricate than contemporary generative AI.

“We applied our methodology to train a variety of autoencoders on GPT-2 small and GPT-4 activations, incorporating a 16 million feature autoencoder on GPT-4,” stated OpenAI.

Thus far, they are unable to decipher all behaviors of GPT-4: “At present, passing GPT-4’s activations through the sparse autoencoder yields a performance equivalent to a model trained with approximately 10 times lower computational power.” Nevertheless, this study marks another stride toward comprehending the enigmatic nature of generative AI and could potentially enhance its security.

How altering features influences bias and cybersecurity

Anthropic identified three distinct features that could hold significance in cybersecurity: unsafe code, code glitches, and backdoors. These features might get activated in discussions unrelated to unsafe code; for instance, the backdoor feature activates in conversations or visuals regarding “covert cameras” and “jewelry with a concealed USB drive.” Nonetheless, Anthropic managed to experiment with “clamping” — in essence, boosting or reducing the intensity of — these specific features, potentially aiding in adjusting models to steer clear of or handle sensitive security topics tactfully.

Bias or offensive language in Claude can be fine-tuned utilizing feature clamping, albeit Claude might resist against some of its own statements. When the researchers amplified a feature linked to hatred and slurs to 20 times its maximum activation value, Claude exhibited a response characterized by “self-deprecation,” which the researchers found disconcerting.

Another feature analyzed by the researchers is sycophancy; they were able to modify the model to excessively praise the individual conversing with it.

What implications do studies on AI autoencoders have on business cybersecurity?

Identifying some of the features utilized by an LLM to correlate concepts could enable tuning an AI to prevent biased utterances or to curb scenarios where the AI might be compelled to deceive the user. Deeper comprehension by Anthropic into why LLMs exhibit their behavior could offer more tuning options for Anthropic’s corporate customers.

READ: 8 AI Business Trends, According to Stanford Researchers

Anthropic plans to leverage this research to further explore subjects concerning the security of generative AI and LLMs as a whole, such as investigating which features activate or remain dormant if Claude is prompted to advise on manufacturing weapons.

Another avenue of exploration for Anthropic in the future is questioning: “Can we utilize the feature framework to ascertain when fine-tuning a model raises the likelihood of undesirable behaviors?”

TechRepublic has approached Anthropic for additional insights. Additionally, this article was revised to incorporate OpenAI’s research on sparse autoencoders.