A number of experiments suggest that ChatGPT, the popular large language model (LLM), could help defenders triage potential security incidents and find security vulnerabilities in code, even though the artificial intelligence (AI) model was not specifically trained for such activities, according to results released this week.

In a Feb. 15 analysis of ChatGPT’s utility as an incident response tool, Victor Sergeev, incident response team lead at Kaspersky, found that ChatGPT could identify malicious processes running on compromised systems. Sergeev infected a system with the Meterpreter and PowerShell Empire agents, took common steps in the role of an adversary, and then ran a ChatGPT-powered scanner against the system.

The LLM identified two malicious processes running on the system and correctly ignored 137 benign processes, potentially reducing overhead to a significant degree, he wrote in a blog post describing the experiment.

“ChatGPT successfully identified suspicious service installations, without false positives,” Sergeev wrote. “For the second service, it provided a conclusion about why the service should be classified as an indicator of compromise.”

Security researchers and AI hackers have all taken an interest in ChatGPT, probing the LLM for weaknesses, while other researchers, as well as cybercriminals, have attempted to lure the LLM to the dark side, setting it to produce better phishing email messages or generate malware.

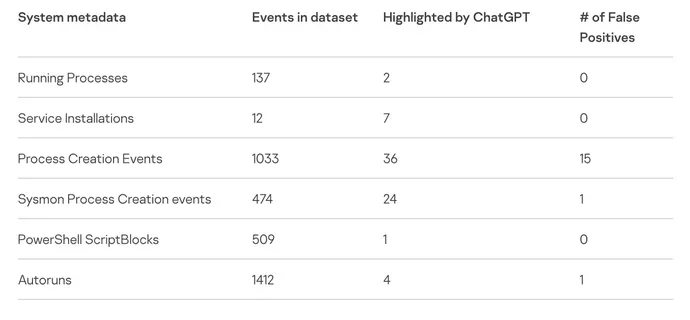

found indicators of compromise with some false positives. Source: Kaspersky

Yet security researchers are also looking at how the generalized language model performs on specific defense-related tasks. In December, digital forensics firm Cado Security used ChatGPT to create a timeline of a compromise using JSON data from an incident, which produced a good, but not totally accurate, report. Security consultancy NCC Group experimented with ChatGPT as a way to find vulnerabilities in code, which it did, but not always accurately.

The conclusion is that security analysts, developers, and reverse engineers need to take care whenever using LLMs, especially for tasks outside the scope of their capabilities, says Chris Anley, chief scientist at security consultancy NCC Group.

“I definitely think that professional developers, and other folks who work with code should explore ChatGPT and similar models, but more for inspiration than for absolutely correct, factual results,” he says, adding that “security code review isn’t something we should be using ChatGPT for, so it’s kind of unfair to expect it to be perfect first time out.”

Analyzing IoCs With AI

The Kaspersky experiment started by asking ChatGPT about several hackers’ tools, such as Mimikatz and Fast Reverse Proxy. The AI model successfully described those tools, but when asked to identify well-known hashes and domains, it failed. The LLM could not identify a well-known hash of the WannaCry malware, for example.

The relative success of identifying malicious code on the host, however, led Kaspersky’s Sergeev to ask ChatGPT to create a PowerShell script to collect metadata and indicators of compromise from a system and submit them to the LLM. After improving the code manually, Sergeev used the script on the infected test system.
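
The collect-and-submit loop described above can be sketched roughly as follows. This is a minimal illustration, not Sergeev's script: his was written in PowerShell and is not reproduced in this article. The prompt wording, the record fields, and the `ask_llm` callable are all assumptions made for the example, and `fake_llm` is a stand-in so the sketch runs without contacting any service.

```python
import json
from typing import Callable, Dict, Iterable, List

# Hypothetical prompt wording; the prompt used in the experiment is not given here.
PROMPT_TEMPLATE = (
    "Is the following process metadata an indicator of compromise? "
    "Answer YES or NO on the first line, then give a one-sentence reason.\n\n"
    "{metadata}"
)


def build_prompt(record: Dict) -> str:
    """Serialize one metadata record into a question for the model."""
    return PROMPT_TEMPLATE.format(metadata=json.dumps(record, sort_keys=True))


def triage(records: Iterable[Dict], ask_llm: Callable[[str], str]) -> List[Dict]:
    """Send each record to the model and keep the ones it flags.

    `ask_llm` is any function that takes a prompt and returns the model's
    text reply; wiring it to a real service is left out on purpose.
    """
    flagged = []
    for record in records:
        reply_lines = ask_llm(build_prompt(record)).strip().splitlines()
        verdict = reply_lines[0].strip().upper() if reply_lines else ""
        if verdict.startswith("YES"):
            reason = " ".join(reply_lines[1:]).strip()
            flagged.append({"record": record, "reason": reason})
    return flagged


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: flags encoded PowerShell command lines only."""
    if "-enc" in prompt.lower():
        return "YES\nEncoded PowerShell command line."
    return "NO\nNothing unusual."


if __name__ == "__main__":
    sample = [
        {"name": "svchost.exe", "command_line": "svchost.exe -k netsvcs"},
        {"name": "powershell.exe", "command_line": "powershell.exe -NoP -enc SQBFAFgA"},
    ]
    for hit in triage(sample, fake_llm):
        print(hit["record"]["name"], "->", hit["reason"])
```

Passing the model in as a callable keeps the sketch independent of any particular API, and it makes the point in Sergeev's warnings concrete: whatever the model returns is only a verdict to review, not a confirmed finding.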

Overall, the Kaspersky analyst used ChatGPT to analyze the metadata for more than 3,500 events on the test system, finding 74 potential indicators of compromise, 17 of which were false positives. The experiment suggests that ChatGPT could be useful for collecting forensics information for companies that are not running an endpoint detection and response (EDR) system, detecting code obfuscation, or reverse engineering code binaries.
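
To put the reported figures in perspective, the short calculation below works out what share of the flagged items were genuine. It uses only the numbers quoted above and treats 3,500 as a lower bound on the event count, since the article says "more than 3,500." The function name is illustrative, and recall cannot be computed because no count of missed indicators (false negatives) is given.

```python
def precision_of(flagged: int, false_positives: int) -> float:
    """Fraction of flagged items that were true positives."""
    if flagged <= 0:
        raise ValueError("flagged must be positive")
    if not 0 <= false_positives <= flagged:
        raise ValueError("false_positives must be between 0 and flagged")
    return (flagged - false_positives) / flagged


FLAGGED = 74            # potential indicators of compromise reported
FALSE_POSITIVES = 17    # of those, how many were false positives
EVENTS_AT_LEAST = 3500  # "more than 3,500 events": a lower bound, not an exact count

true_positives = FLAGGED - FALSE_POSITIVES
precision = precision_of(FLAGGED, FALSE_POSITIVES)
# Because the real event count is higher, this share is an upper bound.
flagged_share_at_most = FLAGGED / EVENTS_AT_LEAST

if __name__ == "__main__":
    print(f"true positives: {true_positives}")
    print(f"precision: {precision:.1%}")
    print(f"share of events flagged: at most {flagged_share_at_most:.1%}")
```

Under these assumptions, 57 of the 74 flagged items were genuine, or roughly 77%, and an analyst would have had to review only about 2% of the events.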

Sergeev also warned that inaccuracies are a very real problem. “Beware of false positives and false negatives that this can produce,” he wrote. “At the end of the day, this is just another statistical neural network prone to producing unexpected results.”

In its analysis, Cado Security warned that ChatGPT typically does not qualify the confidence of its results. “This is a common concern with ChatGPT that OpenAI [has] raised themselves: it can hallucinate, and when it does hallucinate, it does so with confidence,” Cado’s analysis stated.

Fair Use and Privacy Rules Need Clarifying

The experiments also raise some critical issues regarding the data submitted to OpenAI’s ChatGPT system. Already, companies have started taking exception to the creation of datasets using information on the Internet, with companies such as Clearview AI and Stability AI facing lawsuits seeking to curtail the use of their machine learning models.

Privacy is another issue. Security professionals have to determine whether submitted indicators of compromise expose sensitive data, or whether submitting software code for analysis violates a company’s intellectual property, says NCC Group’s Anley.

“Whether it’s a good idea to submit code to ChatGPT depends a lot on the circumstances,” he says. “A lot of code is proprietary and is under various legal protections, so I wouldn’t recommend that people submit code to third parties unless they have permission to do so.”

Sergeev issued a similar warning: Using ChatGPT to detect compromise sends sensitive data to the system by necessity, which could be a violation of company policy and may present a business risk. “By using these scripts, you send data, including sensitive data, to OpenAI,” he stated, “so be careful and consult the system owner beforehand.”