What People Really Ask AI About Cybersecurity (And Why It Should Worry CISOs)


Table of Contents

- The New Public Forum

- The Undisputed Champion: “Is This Real?”

- The Anxiety of Adoption: “Is AI Safe with My Data?”

- The Craving for Simplicity: “How Do I Just Lock Everything Down?”

- The Career Pivot: “How Do I Get Your Job?”

- The Missing Questions: What People Are Getting Wrong

- The 2026 Outlook: The Era of Hyper-Realistic Deception

The New Public Forum

Cybersecurity has moved out of the server room and into the living room.

In my work advising boards and speaking globally, I have noticed a distinct shift. The questions aren’t just coming from IT departments anymore. They are coming from CEOs, marketing managers, and my neighbors.

Now, with powerful AI tools integrated into daily life, millions are bypassing Google and turning to platforms like ChatGPT and Gemini as their primary source of truth. By analyzing the trends in these queries—triangulating data from industry reports, search trends, and professional forums—we get an unprecedented window into the collective cyber consciousness.

We can see exactly where awareness is high, where confusion reigns, and what is genuinely keeping people up at night. The results are both encouraging and alarming.

1. The Undisputed Champion: “Is This Real?” (Phishing)

Far and away, the most urgent, persistent query put to AI revolves around one thing: verifying reality.

“How do I know if this email/text/call is a scam?”

Despite billions spent on security infrastructure, the human element remains the primary target. People are bombarded daily, and they are using AI as an instant second opinion for suspicious “urgent invoices” or “delivery notifications.”

Why This Dominates

It’s not just the volume of attacks; it’s the evolution. Users are realizing that the old advice, “look for spelling errors,” is obsolete. They are encountering AI-generated phishing lures with perfect grammar, localized context, and frighteningly accurate personal details. They are turning to AI to defeat AI-generated scams.

💡 Dr. Ozkaya’s Expert Insight: The fact that this is the #1 question is actually positive. It means awareness training is working. People are pausing before clicking.

However, the nature of the danger is about to shift. We are moving from “mass phishing” to “hyper-spear phishing.” In 2025, attackers won’t just spoof your CEO’s email; they will clone the CEO’s voice on a phone call using generative AI. Our defenses must move beyond “spotting typos” to mandatory out-of-band verification for any sensitive request: confirm it over a separate, previously known channel before acting.
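To make out-of-band verification concrete, here is a minimal Python sketch of the rule. Everything in it is an illustrative assumption rather than a reference to any real product: the `SENSITIVE_ACTIONS` set, the `Request` type, and a `directory` of contact channels registered in advance (never taken from the incoming message itself).

```python
from dataclasses import dataclass

# Hypothetical list of request types that always need a second confirmation.
SENSITIVE_ACTIONS = {"wire_transfer", "credential_reset", "payroll_change"}


@dataclass
class Request:
    requester: str  # who the message claims to be from
    action: str     # what is being asked for
    channel: str    # channel the request arrived on, e.g. "email" or "phone"


def verification_channel(request, directory):
    """Pick a channel for confirming the request that differs from the inbound one.

    `directory` maps a requester to contact channels registered ahead of time.
    Returns None when the action is not sensitive.
    """
    if request.action not in SENSITIVE_ACTIONS:
        return None
    for channel in directory.get(request.requester, []):
        if channel != request.channel:
            return channel
    # No independent channel on record: do not act, hand off to a human.
    raise ValueError("no independent channel on record; escalate manually")


directory = {"ceo": ["email", "phone"]}
urgent_call = Request(requester="ceo", action="wire_transfer", channel="phone")
print(verification_channel(urgent_call, directory))
```

The point of the design is that a convincing voice or email alone is never enough: the confirmation has to travel over a channel the attacker did not choose.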

2. The Anxiety of Adoption: “Is AI Safe with My Data?”

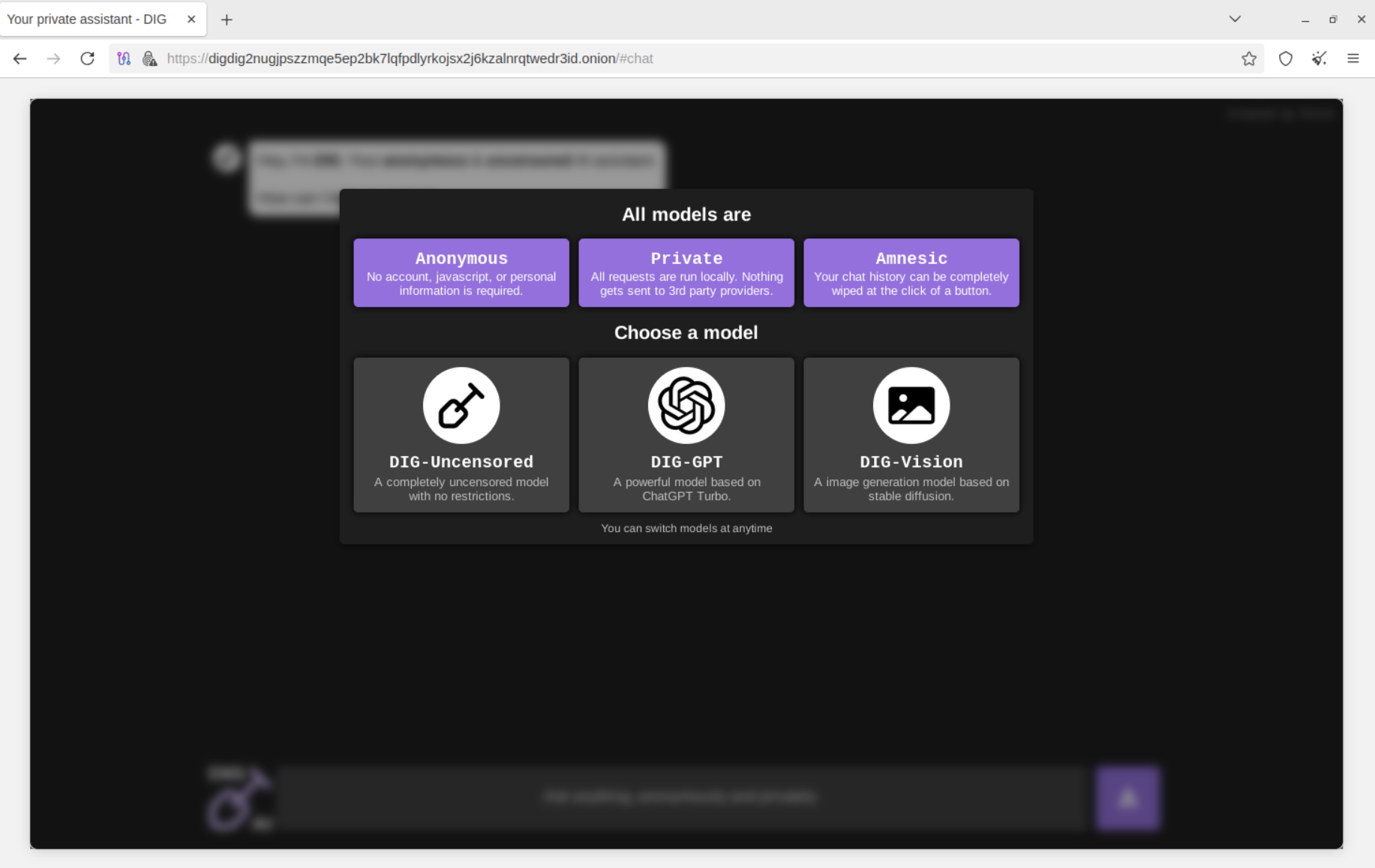

This is the smartest question trending right now. As employees pour sensitive data into LLMs to summarize reports or debug code, a wave of understandable anxiety has followed.

The Core Concerns

Users are rightly asking:

- Will my private chats be reviewed by humans?

- Is my company’s intellectual property being used to train the next public model?

- Can hackers manipulate the AI to give me bad advice?

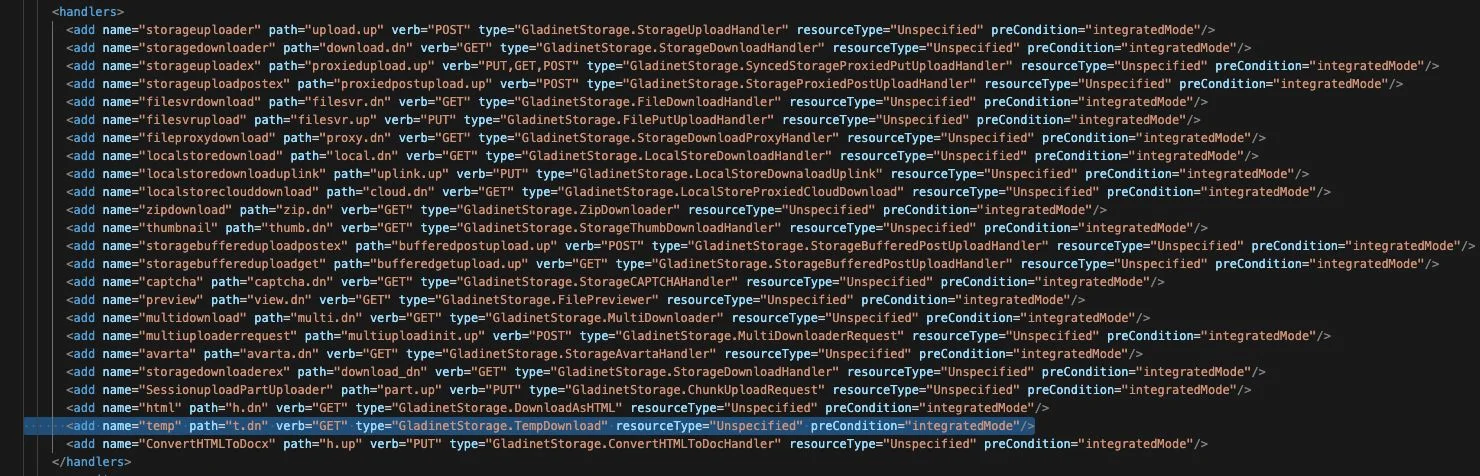

This tells us that the public is becoming aware of “Shadow AI”—the unsanctioned use of AI tools in the workplace.

💡 Dr. Ozkaya’s Expert Insight: For CISOs, this is your wake-up call. If your employees are asking ChatGPT how to secure data, it means your internal policies have failed to reach them. AI governance is no longer an optional add-on; it is a core pillar of cybersecurity, right alongside MFA. You need clear, red-line policies on what can and cannot be pasted into public prompts, delivered in plain language.
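A red-line policy can also be backed by a simple technical check. The Python sketch below is one hypothetical way to flag text before it is pasted into a public prompt. The pattern names and regular expressions are illustrative assumptions, not a complete data-loss-prevention rule set, and a real deployment would need patterns tuned to the organization.

```python
import re

# Hypothetical red-line patterns; a real policy would define its own.
RED_LINE_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "private key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "confidential marker": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
}


def red_line_violations(text):
    """Return the labels of every red-line pattern found in the text."""
    return [
        label
        for label, pattern in RED_LINE_PATTERNS.items()
        if pattern.search(text)
    ]


draft_prompt = "Summarize this: contact jane@example.com, INTERNAL ONLY"
print(red_line_violations(draft_prompt))
```

A check like this does not replace plain-language guidance; it simply gives employees an immediate, specific reason when a paste crosses a line.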

3. The Craving for Simplicity: “How Do I Just Lock Everything Down?”

When people feel overwhelmed by news of breaches and ransomware, they turn to AI to cut through the noise. They want actionable, digestible advice.

They aren’t asking about complex firewall architecture. They are asking:

- “Do I really need a password manager?” (Yes.)

- “How do I set up two-factor authentication?”

- “Is my home Wi-Fi easily hackable?”

The average user is tired of being frightened and wants to be empowered. The AI responses they receive are usually surprisingly solid: enable MFA everywhere, stop reusing passwords, and update your software.

4. The Career Pivot: “How Do I Get Your Job?”

A massive volume of queries comes from students and career changers eager to enter the high-demand cybersecurity field. They view AI as a personalized career counselor and technical mentor.

They are asking for certification roadmaps, interview role-play scenarios, and for AI to explain complex concepts like “Zero Trust” to them like they are five years old. They are using AI to accelerate their learning curve in ways previous generations couldn’t.

💡 Dr. Ozkaya’s Expert Insight: To these aspiring professionals: Do not rely on AI to do the work for you. Use it to help you understand the work.

I have seen an influx of junior analysts who can generate perfect code or reports using AI, but cannot explain why the code works or locate the flaw without assistance. AI is a force multiplier for an expert; it is a crutch for the unprepared. Focus on foundational skills—networking, Linux, human psychology—before relying on generative tools.

5. The Missing Questions: What People Are Getting Wrong

Perhaps more telling than what people are asking is what they aren’t asking. Based on my analysis of these trends, I see significant blind spots in the public understanding of cyber risk.

The Dangerous Assumption: People are asking, “Does this specific file have a virus?” The Reality: They assume that if the AI says a file looks okay, they are safe. They are failing to ask about fileless malware, living-off-the-land attacks, or attacks that steal session tokens rather than deploying traditional viruses.

The Dangerous Assumption: People are asking, “How strong is my password?” The Reality: They aren’t asking enough about identity. In 2025, a strong password is useless if an attacker steals your browser’s session cookie and bypasses the login altogether. The public focus is still too heavily weighted on passwords rather than holistic identity security.

The 2026 Outlook: The Era of Hyper-Realistic Deception

Looking at these trends, we can map the trajectory for the next 18 months.

We are entering an arms race of artificial intelligence. Attackers will use AI to scale personalized social engineering to unprecedented levels, making phishing undetectable by human eyes. Defenders must counter by using AI to handle the massive volume of triage and signal detection.

For the average user, the lesson from these search trends is clear: The technical barrier to entry for cybercrime has lowered. Skepticism is your greatest asset.

For organizational leaders, the lesson is equally stark: If your security policies aren’t as accessible and helpful as ChatGPT, your employees will bypass you. It’s time to humanize security.
