Web 3.0 Calls for Data Integrity

If you’ve ever taken a computer security course, you’ve likely learned about the three pillars of computer security (confidentiality, integrity, and availability), known as the CIA triad. When we talk about a system being secure, these are the properties we mean. All three matter, but to different degrees in different contexts. In a world populated by artificial intelligence (AI) systems and artificially intelligent agents, integrity will be paramount.

What is data integrity? It means ensuring that data is not altered without authorization. That is the security angle, but integrity covers much more. It includes the accuracy, completeness, and quality of data, over both time and space. It means preventing accidental data loss; the “undo” button is a primitive integrity measure. It also means ensuring that data is accurate when it is collected: that it comes from a trustworthy source, that nothing important is missing, and that it does not change as it moves from format to format. The ability to restart your computer is another integrity measure.

The CIA triad has evolved along with the Internet. The first iteration of the Web, Web 1.0 of the 1990s and early 2000s, prioritized availability. In this era, organizations and individuals rushed to digitize their content, creating what has become an unprecedented repository of human knowledge. Organizations worldwide established their digital presence, leading to massive digitization projects where quantity took precedence over quality. The emphasis on making information available overshadowed other concerns.

As Web technologies matured, the focus shifted to protecting the vast amounts of data flowing through online systems. This is Web 2.0: the Internet of today. Interactive features and user-generated content transformed the Web from a read-only medium into a participatory platform. The growth of personal data, along with the rise of interactive platforms for e-commerce, social media, and other online services, demanded both data protection and user privacy. Confidentiality became paramount.

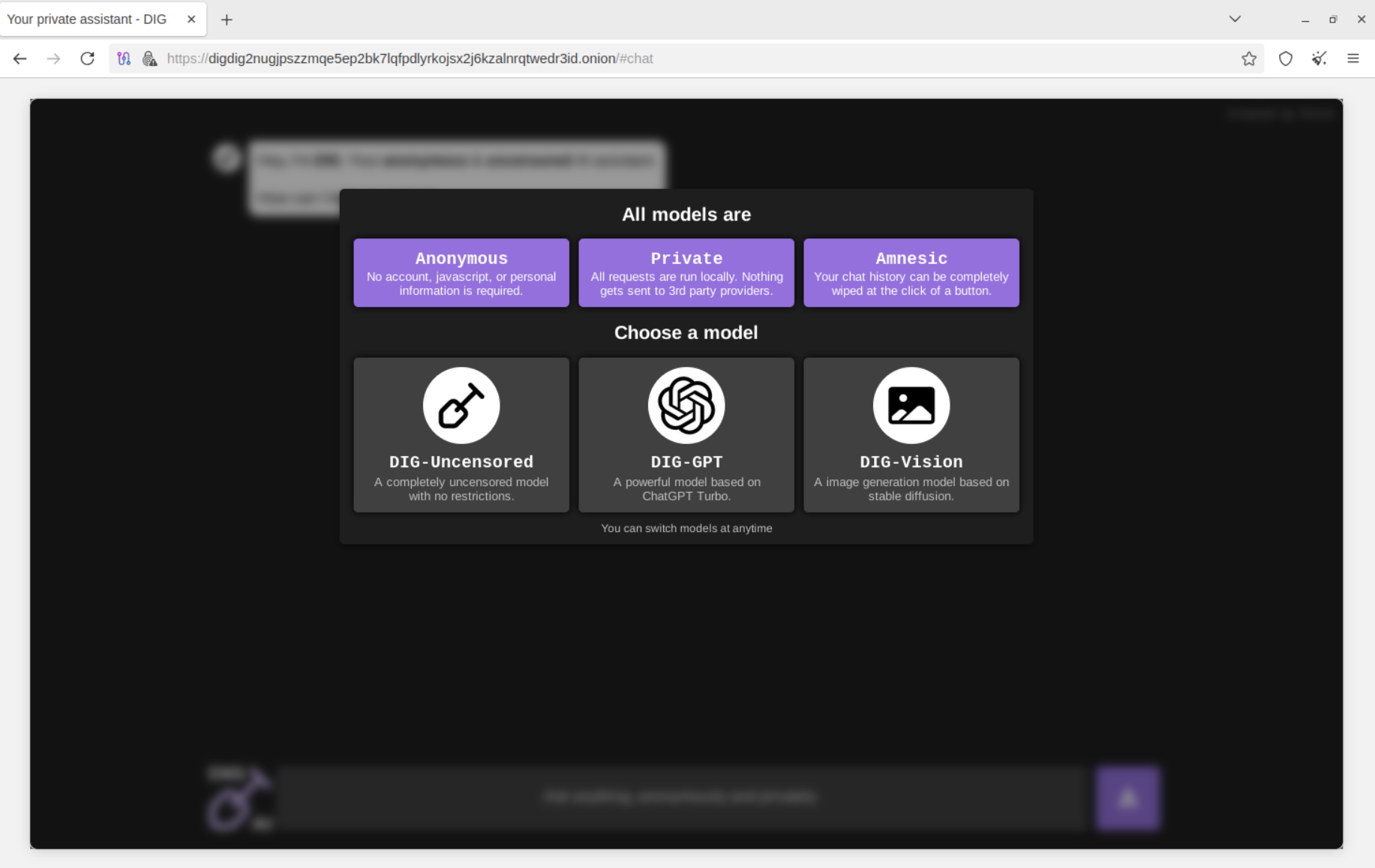

We stand at the threshold of a new Web era: Web 3.0. This is a decentralized, intelligent Web. Peer-to-peer social-networking systems promise to break the tech giants’ control over how we interact with each other. Tim Berners-Lee’s open W3C protocol, Solid, represents a fundamental shift in how we think about data ownership and control. A future filled with AI agents requires verifiable, trustworthy personal data and computation. In this world, data integrity takes center stage.

For example, the 5G communications revolution is not just about faster access to videos; it is about connected devices communicating with each other without human intervention. Without data integrity, vehicles could not exchange real-time information about road changes and conditions. There would be no drone swarm coordination, no smart power grid, and no reliable mesh networking. And there would be no way to securely empower AI agents.

In particular, AI systems require robust integrity controls because of how they process data. This means technical controls to ensure that data is accurate, that its meaning is preserved as it is processed, that it produces reliable results, and that humans can correct it when necessary. Just as a scientific instrument must be calibrated to measure reality accurately, AI systems need integrity controls that preserve the connection between their data and ground truth.

This goes beyond preventing data tampering. It means building systems that maintain verifiable chains of trust between their inputs, processing, and outputs, so that humans can understand and validate what the AI is doing. AI systems need clean, consistent, and verifiable control processes to learn and make decisions effectively. Without this foundation of verifiable truth, AI systems risk becoming a series of opaque boxes.
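One way to picture a verifiable chain between inputs, processing, and outputs is a hash-chained log, where each entry's hash covers the entry before it. The following is a minimal sketch in Python, not a description of any particular AI system: the record fields, the `GENESIS` value, and the function names are all assumptions made for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # hypothetical starting value for an empty log


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append(log: list, record: dict) -> None:
    """Append a record, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": record_hash(record, prev_hash)})


def verify(log: list) -> bool:
    """Recompute every link; an edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["hash"] != record_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append(log, {"stage": "input", "detail": "dataset v1"})
append(log, {"stage": "process", "detail": "training run"})
append(log, {"stage": "output", "detail": "model prediction"})
print(verify(log))  # True

log[0]["record"]["detail"] = "dataset v2"  # simulate tampering
print(verify(log))  # False
```

This sketch only detects edits to recorded entries. It does not detect an entry being dropped from the end of the log, and a real system would also need to sign or externally anchor the latest hash.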

Recent history provides many examples of integrity failures that naturally undermine public trust in AI systems. Machine learning (ML) models trained without scrutiny on massive datasets have produced predictably biased results in hiring systems. Autonomous vehicles fed incorrect data have made flawed, and fatal, decisions. Medical diagnosis systems have given flawed recommendations without being able to explain themselves. A lack of integrity controls undermines AI systems and harms the people who depend on them.

These incidents also show how AI integrity failures can appear at different levels of system operation. At the training level, data may be subtly corrupted or biased even before model development begins. At the model level, mathematical foundations and training processes can introduce new integrity issues even with clean data. During execution, environmental changes and runtime modifications can corrupt previously valid models. And at the output level, verifying AI-generated content and tracking it through system chains raises new integrity concerns. Each level compounds the challenges of the one before it, ultimately producing societal costs such as reinforced biases and diminished agency.

Think of it like protecting a building. Locking a door is not enough; you also need solid concrete foundations, sturdy framing, a durable roof, secure double-pane windows, and perhaps motion-sensing cameras. Similarly, we need digital security at every layer to ensure that the whole system can be trusted.

This layered approach to understanding security becomes increasingly important as AI systems grow in complexity and autonomy, especially as large language models (LLMs) and deep-learning systems make high-stakes decisions. We need to verify the integrity of each layer when building and deploying digital systems that affect human lives and societal outcomes.

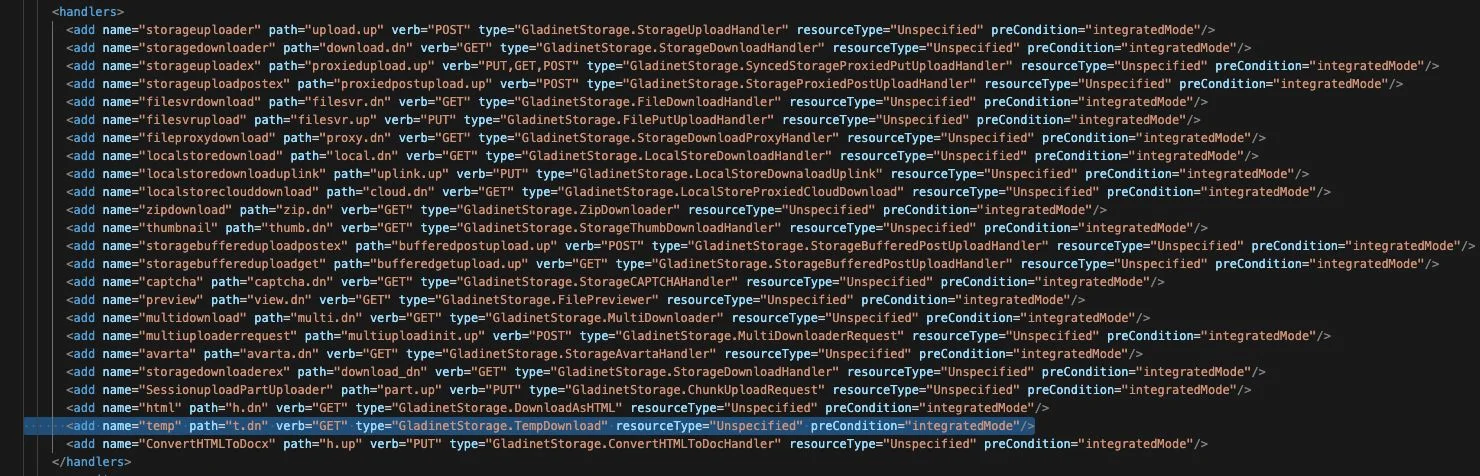

At the foundation level, bits are stored in computer hardware. This is the most basic encoding of our data, model weights, and computational instructions. The next layer is the file system architecture: the way those binary sequences are organized into structured files and directories that a computer can efficiently access and process. In AI systems, this includes how we store and organize training data, model checkpoints, and hyperparameter configurations.

On top of that are the application layers: the software and frameworks, such as PyTorch and TensorFlow, that let us train models, process data, and generate outputs. This layer handles the complex mathematics of neural networks, gradient descent, and other ML operations.

Finally, at the user-interface level, we have visualization and interaction systems: what humans actually see and engage with. For AI systems, this could be anything from confidence scores and prediction probabilities to generated text and images or autonomous robot movements.

Why does this layered perspective matter? Vulnerabilities and integrity issues can appear at any level, so understanding these layers helps security experts and AI researchers perform comprehensive threat modeling. This enables defense-in-depth strategies, from cryptographic verification of training data to robust model architectures to interpretable outputs. This multi-layered security approach becomes especially crucial as AI systems take on more autonomous decision-making roles in critical domains such as healthcare, finance, and public safety. We must ensure integrity and reliability at every level of the stack.
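As a concrete illustration of the first item, cryptographic validation of training data, here is a minimal sketch in Python that records a SHA-256 digest for every file in a dataset directory and later reports any file that was added, removed, or changed. The function names and the manifest layout are assumptions for illustration, not part of any standard or framework.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict:
    """Map each file's path (relative to data_dir) to its digest."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(data_dir: Path, manifest: dict) -> list:
    """List files that are missing, added, or altered since the manifest."""
    current = build_manifest(data_dir)
    names = set(manifest) | set(current)
    return sorted(n for n in names if manifest.get(n) != current.get(n))


with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp)
    (data_dir / "train.csv").write_text("x,y\n1,2\n")
    manifest = build_manifest(data_dir)
    print(changed_files(data_dir, manifest))  # []

    (data_dir / "train.csv").write_text("x,y\n1,3\n")  # simulate tampering
    print(changed_files(data_dir, manifest))  # ['train.csv']
```

A check like this only covers the storage layer, and only if the manifest itself is kept somewhere an attacker cannot rewrite it, for example signed or stored separately from the data. It says nothing about whether the data was accurate when it was collected.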

The risks of deploying AI without proper integrity controls are severe and often underappreciated. When AI systems operate without sufficient safeguards against corrupted or manipulated data, they can produce subtly flawed outputs that appear valid on the surface. These errors and biases can propagate across interconnected systems, magnifying their impact. Without proper integrity controls, an AI system might train on polluted data, make decisions based on misleading assumptions, or have its outputs altered without detection. The results can range from degraded performance to catastrophic failures.

In the realm of Web 3.0, we identify four crucial areas where integrity plays a pivotal role. The first area is defined by granular access, enabling users and organizations to retain precise control over who can access and modify specific information and for what purposes. The second area pertains to authentication, extending beyond the basic “Who are you?” queries of today to ensure thorough validation and authorization of data access at all stages. Transparent data ownership constitutes the third crucial area, empowering data owners to monitor how and when their data is utilized and creating a traceable history of data lineage. Lastly, access standardization refers to the implementation of universal interfaces and protocols that facilitate consistent data access while upholding security measures.
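To make the first and third areas more tangible, the sketch below models granular, purpose-bound access together with a traceable access history. It is a toy Python illustration under stated assumptions: the `Grant` and `DataStore` classes, their fields, and the example names are hypothetical, and authentication of the requesting subject is assumed to have happened elsewhere.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Grant:
    """One permission: who may do what to which resource, and why."""
    subject: str
    resource: str
    action: str   # e.g. "read" or "write"
    purpose: str


@dataclass
class DataStore:
    grants: set = field(default_factory=set)
    audit_log: list = field(default_factory=list)

    def allow(self, grant: Grant) -> None:
        """Record a permission granted by the data owner."""
        self.grants.add(grant)

    def request(self, subject: str, resource: str,
                action: str, purpose: str) -> bool:
        """Check a request against the grants and log the decision."""
        allowed = Grant(subject, resource, action, purpose) in self.grants
        self.audit_log.append((subject, resource, action, purpose, allowed))
        return allowed


store = DataStore()
store.allow(Grant("clinic-agent", "health/records", "read", "diagnosis"))

print(store.request("clinic-agent", "health/records", "read", "diagnosis"))  # True
print(store.request("clinic-agent", "health/records", "read", "marketing"))  # False
print(len(store.audit_log))  # 2
```

Every request is logged, whether it was granted or denied, so the data owner can later review how and when the data was accessed. A production design would also need to protect the log itself from tampering.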

Fortunately, we are not starting from scratch. Open W3C protocols already address some of these concerns: decentralized identifiers for verifiable digital identity, the verifiable credentials data model for expressing digital credentials, ActivityPub for decentralized social networking (as used by Mastodon), Solid for distributed data storage and retrieval, and WebAuthn for strong authentication standards. By providing standardized ways to verify data provenance and maintain data integrity throughout its life cycle, Web 3.0 creates the trusted environment that AI systems need to operate reliably. This shift of integrity control into the hands of users helps ensure that data remains trustworthy from creation and collection through processing and storage.

Integrity forms the bedrock of trust, operating on both technical and human levels. Looking ahead, integrity controls will be instrumental in shaping AI development, transitioning from optional functionalities to core architectural prerequisites, comparable to how SSL certificates progressed from being a luxury in banking circles to becoming a fundamental requirement for any web service.

Web 3.0 protocols can build integrity controls into their foundation, creating a more reliable infrastructure for AI systems. Today, we take availability for granted; anything less than 100% uptime for critical websites is considered unacceptable. In the future, we will need the same assurances for integrity. Success will require following practical guidelines for maintaining data integrity throughout the AI life cycle, from data collection through model training and on to deployment, use, and evolution. These guidelines will address not just technical controls but also governance structures and human oversight, similar to how privacy policies evolved from legal boilerplate into comprehensive frameworks for data stewardship. Common standards and protocols, developed through industry collaboration and regulatory frameworks, will ensure consistent integrity controls across different AI systems and applications.

Just as the HTTPS protocol laid the groundwork for trusted e-commerce, the time is ripe for new integrity-centered standards to facilitate the emergence of trustworthy AI services in the future.

This collaborative essay was originally published in Communications of the ACM.