The U.S. Department of Justice (DoJ) said it has seized two internet domains and searched around 1,000 social media accounts that Russian threat actors allegedly used to covertly spread pro-Kremlin disinformation at scale, both within the U.S. and abroad.

In a statement, the DoJ linked the social media bot farm to an elaborate propaganda campaign that used AI to generate fake social media profiles, many purporting to belong to U.S. citizens, in order to promote messages aligned with Russian government interests.

The bot network, comprising 968 accounts on X, is said to be part of an elaborate scheme devised by an employee of Russian state-owned media outlet RT, sponsored by the Kremlin, and aided by an officer of Russia's Federal Security Service (FSB), who created and led a covert intelligence organization.

Development of the bot farm began in April 2022, when the individuals behind the scheme acquired online infrastructure while concealing their identities and locations. The goal, according to the DoJ, was to advance Russian interests by spreading disinformation through fictitious online personas representing various nationalities.

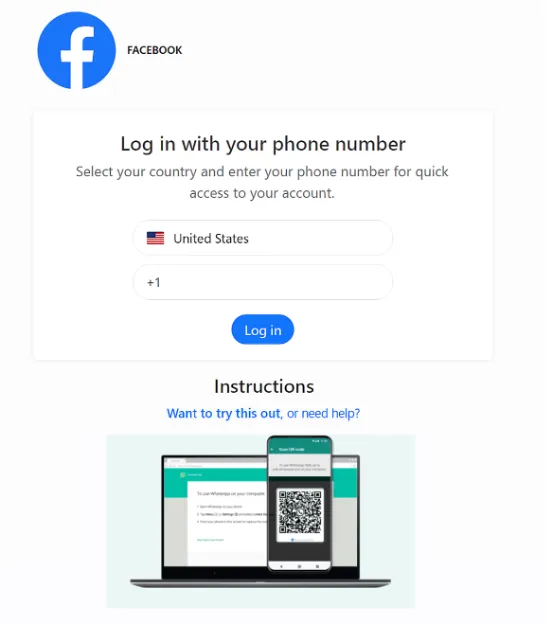

The fake social media accounts were registered using private email servers tied to two domains, mlrtr[.]com and otanmail[.]com, both purchased through the domain registrar Namecheap. X has since suspended the bot accounts for violating its terms of service.
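For defenders who want to check account-registration data against the two seized domains, the matching step could look roughly like the Python sketch below. The function names, the sample addresses, and the choice to also match subdomains are illustrative assumptions; nothing here reflects tooling used by the DoJ, X, or the partner agencies.

```python
# Defanged indicators exactly as they are written in the article.
DEFANGED_DOMAINS = ["mlrtr[.]com", "otanmail[.]com"]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'example[.]com' into 'example.com'."""
    return indicator.replace("[.]", ".").lower()


def registration_domain(email: str) -> str:
    """Return the lowercased domain of an email address, or '' if it is malformed."""
    local, sep, domain = email.strip().rpartition("@")
    if not sep or not local or not domain:
        return ""
    return domain.lower().rstrip(".")


def uses_flagged_domain(email: str, flagged: set) -> bool:
    """True if the address sits on a flagged domain or one of its subdomains.

    Matching subdomains is an assumption made for this sketch.
    """
    domain = registration_domain(email)
    if not domain:
        return False
    return any(domain == d or domain.endswith("." + d) for d in flagged)


FLAGGED = {refang(d) for d in DEFANGED_DOMAINS}

if __name__ == "__main__":
    # Hypothetical sample addresses, built at runtime from the refanged domains.
    first, second = sorted(FLAGGED)
    samples = [
        "hypothetical.persona@" + first,
        "someone@mail." + second,
        "reader@example.org",
    ]
    for address in samples:
        print(address, uses_flagged_domain(address, FLAGGED))
```

Comparing the full domain, rather than searching for a substring, avoids false positives on lookalike domains that merely contain one of the flagged names.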

The disinformation campaign, which targeted countries including the U.S., Poland, Germany, the Netherlands, Spain, Ukraine, and Israel, relied on an AI-powered software package named Meliorator that enabled the mass creation and operation of the social media bot farm.

“Using this software, RT affiliates broadcasted misinformation to multiple nations, including the U.S., Poland, Germany, the Netherlands, Spain, Ukraine, and Israel,” law enforcement agencies from Canada, the Netherlands, and the U.S. said.

Meliorator consists of an administrator panel called Brigadir and a backend tool called Taras, which controls the authentic-looking accounts. The accounts' profile pictures and biographical details were generated using an open-source program called Faker.

Each account had a distinct persona, or “essence,” based on one of three bot archetypes: those that propagate political views favorable to the Russian government, those that amplify content already shared by other bots, and those that perpetuate disinformation circulated by both bot and human-operated accounts.

Although the software was identified only on X, further analysis revealed that the threat actors intended to extend it to other social media platforms.

The system also slipped past X's verification safeguards by automatically copying the one-time passcodes sent to the registered email addresses and by assigning proxy IP addresses to the AI-generated personas based on their assumed geographic location.

“Bot persona accounts deliberately avoid detection for terms of service breaches and camouflage themselves within the broader social media landscape to elude being identified as bots. Similar to genuine profiles, these bots follow real accounts aligned with their political inclinations and interests stated in their bio,” the agencies said.

“Farming is a popular hobby for millions of Russian citizens,” RT told Bloomberg in response to the allegations, without directly denying them.

This marks the first time the U.S. has publicly accused a foreign government of using AI in a foreign influence operation. No criminal charges have been made public so far, but the investigation is ongoing.

Doppelganger Persists

In recent months, Google, Meta, and OpenAI have warned that Russian disinformation operations, including those orchestrated by a network known as Doppelganger, have repeatedly abused their platforms to spread pro-Russian propaganda.

“The operation and its associated network and server infrastructure for content distribution remain active,” Qurium and EU DisinfoLab said in a new report.

“Noteworthily, Doppelganger operates not from a concealed data center in a Vladivostok Fortress or a remote military Bat cave but from newly-established Russian providers functioning within Europe’s largest data centers. Doppelganger is closely aligned with cybercrime operations and affiliate ad networks.”

At the core of the operation is a network of bulletproof hosting providers, including Aeza, Tyrant Regime, GIR, and TNSECURITY, which are also known to have hosted command-and-control domains for malware families such as Stealc, Amadey, Agent Tesla, Glupteba, Raccoon Stealer, RisePro, RedLine Stealer, RevengeRAT, Lumma, Meduza, and Mystic.

In addition, NewsGuard, which provides tools for countering misinformation, recently found that widely used AI chatbots tend to repeat “manufactured narratives from state-associated sources posing as local news outlets in 33% of their replies.”

Influence Operations Originating from Iran and China

The U.S. Office of the Director of National Intelligence (ODNI) said Iran is “escalating their foreign influence initiatives, aiming to incite discord and erode trust in our democratic systems.”

The agency also noted that Iranian actors continue to refine their cyber and influence activities, using social media platforms and issuing threats, and that they are posing as activists online to amplify pro-Gaza protests in the U.S.

Google said it blocked more than 10,000 instances of Dragon Bridge (also known as Spamouflage Dragon) activity in the first quarter of 2024. Dragon Bridge is the name given to a spammy yet persistent influence network linked to China that has promoted narratives portraying the U.S. in a negative light, along with content related to the Taiwan elections and the Israel-Hamas conflict, aimed at Chinese speakers on YouTube and Blogger.

By comparison, the company disrupted at least 50,000 such instances in 2022 and a further 65,000 in 2023. In total, it has blocked more than 175,000 instances over the network's lifetime.

“Despite the copious amount of content generation and the magnitude of their actions, DRAGONBRIDGE garners almost zero authentic engagement from legitimate viewers,” Zak Butler, a researcher at Google's Threat Analysis Group (TAG), said of the disruptions. “Instances where DRAGONBRIDGE content managed to attract engagement were overwhelmingly artificial, originating from other DRAGONBRIDGE accounts and not genuine users.”