The Australian government's proposed mandatory guardrails aim to reduce AI risks and build trust in the technology. The 10 proposed guardrails include testing AI models, keeping humans involved, and giving individuals the right to contest automated decisions made by AI.

In September 2024, Industry and Science Minister Ed Husic released these guardrails for public consultation. They could soon govern the use of AI in high-risk settings. The proposals are accompanied by a new Voluntary AI Safety Standard, which encourages businesses to adopt best-practice AI right away.

List of the mandatory AI guardrails being proposed:

Designed to provide clear guidelines on safe and responsible AI utilization in high-risk scenarios, Australia’s 10 mandatory guardrails focus on addressing AI risks and fostering public trust while offering regulatory clarity to businesses.

Guardrail 1: Accountability

Organizations will be required to create, implement, and disclose accountability processes to ensure regulatory compliance. This includes establishing data and risk management policies and defining internal roles and duties.

Guardrail 2: Risk control

An established risk control process to identify and mitigate AI risks is essential. This should extend beyond technical risk assessments to consider potential impacts on individuals, communities, and society before deploying a high-risk AI system.


Guardrail 3: Data security

Organizations must secure AI systems to protect privacy with cybersecurity measures and implement robust data governance to oversee data quality and origins. The government noted that data quality directly influences an AI model’s performance and reliability.

Guardrail 4: Evaluation

Prior to market release, high-risk AI systems should undergo testing and evaluation. Continuous monitoring post-deployment is also necessary to ensure adherence to specific, objective, and measurable performance benchmarks, thereby minimizing risks.

Guardrail 5: Human supervision

High-risk AI systems must be subject to meaningful human oversight. Organizations must ensure that people can understand and supervise AI systems effectively, intervening where necessary across the AI lifecycle and supply chain.

Guardrail 6: User notification

Organizations must inform end users when they are the subject of AI-driven decisions, are interacting with AI, or are consuming AI-generated content, so that they understand how AI is affecting them. Communication should be clear, accessible, and relevant.

Guardrail 7: Disputing AI

Individuals impacted negatively by AI systems should have the right to challenge their usage or outcomes. Organizations need to establish procedures for impacted individuals to contest AI decisions or lodge complaints regarding their experience or treatment due to high-risk AI systems.

Guardrail 8: Openness

Organizations must be transparent with others in the AI supply chain about data, models, and systems so that risks can be addressed efficiently. When stakeholders lack critical information about how a system works, explainability can suffer, much as it does today with advanced AI models.

Guardrail 9: AI documentation

Organizations must maintain various records on AI systems throughout their lifecycle, including technical documentation. These records must be provided to relevant authorities upon request for compliance assessment with the guardrails.


Guardrail 10: AI audits

Organizations must undergo conformity audits, serving as accountability and quality assurance measures to demonstrate adherence to the guardrails for high-risk AI systems. These audits can be conducted by AI developers, third parties, or government bodies.

When and how will the 10 new mandatory guardrails be implemented?

The mandatory guardrails are under public consultation until Oct. 4, 2024.

Subsequently, the government will finalize and implement the guardrails, including the potential creation of a new Australian AI Act, according to Husic’s statements.

Other options include:

- Incorporating the new guardrails into existing regulatory frameworks.

- Introducing framework legislation with amendments to current laws.

Husic said the government is committed to doing this promptly. The guardrails have emerged from an extensive consultation process on AI governance that has been in progress since June 2023.

What is prompting the government to adopt its current regulatory approach?

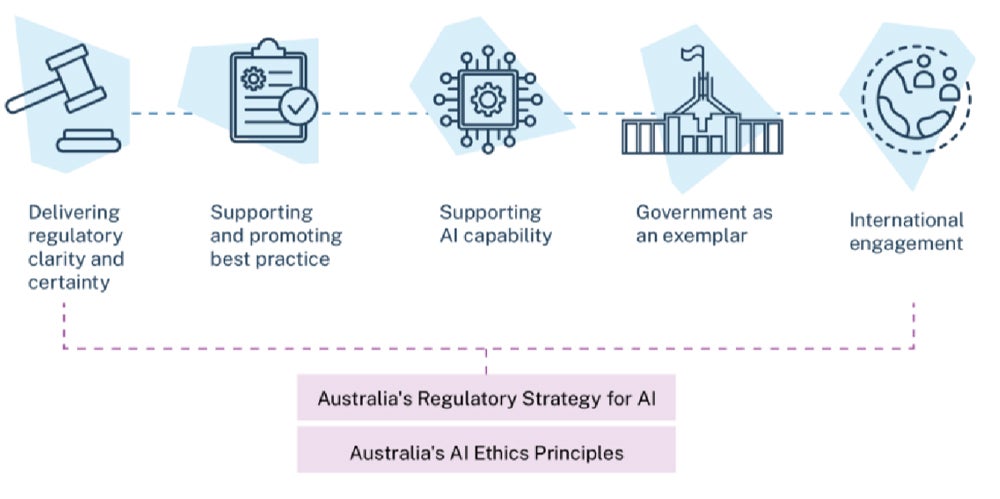

The Australian authorities are mirroring the EU by opting for a risk-oriented strategy for overseeing AI. This strategy aims to strike a balance between the potential advantages AI offers and its deployment in contexts where risks are high.

Emphasizing contexts of high risk

The preventative measures proposed in the guardrails aim to “prevent catastrophic harm before its occurrence,” as indicated by the government in its Safe and responsible AI in Australia proposals paper.

The government will outline the criteria for defining high-risk AI as part of the consultation process. However, it hints at considering scenarios such as adverse effects on an individual’s human rights, negative impacts on physical or mental well-being or safety, and legal implications like defamatory content, among other potential dangers.

Businesses require guidance on AI

The government argues that businesses need clear guardrails to deploy AI safely and responsibly.

A recently published Responsible AI Index 2024, initiated by the National AI Centre, reveals that Australian businesses consistently overrate their capacity to implement responsible AI practices.

The survey results indicated:

- 78% of Australian firms believed they were employing AI safely and responsibly, whereas in reality, this was only true in 29% of cases.

- Australian organizations are adopting an average of only 12 out of 38 responsible AI practices.

What actions should businesses and IT teams take at present?

The mandatory guardrails will impose new obligations on organizations using AI in high-risk contexts.

IT and security teams will likely be involved in fulfilling several of these requirements, including responsibilities related to data quality and security, and ensuring transparency of models throughout the supply chain.
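As one way to start, an IT team could keep a simple internal checklist of evidence against each guardrail for every high-risk AI system. The following is a minimal Python sketch under stated assumptions, not a format prescribed by the government: the `GuardrailChecklist` class, the short labels in `GUARDRAILS` (paraphrased from the descriptions in this article), and the example system name are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical short labels, paraphrased from the ten proposed guardrails
# described in this article. They are not official wording.
GUARDRAILS = {
    1: "Accountability processes",
    2: "Risk management process",
    3: "Data governance and security",
    4: "Testing and monitoring",
    5: "Human oversight",
    6: "End-user notification",
    7: "Contesting AI decisions",
    8: "Supply chain transparency",
    9: "Record keeping",
    10: "Conformity audits",
}


@dataclass
class GuardrailChecklist:
    """Tracks which guardrails have evidence recorded for one AI system."""

    system_name: str
    evidence: dict[int, str] = field(default_factory=dict)

    def record(self, guardrail: int, note: str) -> None:
        """Attach a short evidence note to a guardrail number (1 to 10)."""
        if guardrail not in GUARDRAILS:
            raise ValueError(f"unknown guardrail number: {guardrail}")
        self.evidence[guardrail] = note

    def outstanding(self) -> list[int]:
        """Return the guardrail numbers that have no evidence yet."""
        return [n for n in GUARDRAILS if n not in self.evidence]


# Example usage with an assumed system name and assumed evidence notes.
checklist = GuardrailChecklist("customer-support-chatbot")
checklist.record(3, "Data sources and quality checks documented")
checklist.record(6, "Chat window states that replies are AI-generated")

for number in checklist.outstanding():
    print(f"Guardrail {number}: {GUARDRAILS[number]} - no evidence recorded yet")
```

A structure like this only shows where gaps remain; what counts as sufficient evidence for each guardrail would depend on the final requirements.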

The Voluntary AI Safety Standard

The government has introduced a Voluntary AI Safety Standard that is currently accessible to businesses.

IT teams that want to be prepared can use the Voluntary AI Safety Standard to help their businesses meet requirements under any future legislation, which could include the new mandatory guardrails.

The Voluntary AI Safety Standard includes guidance on how businesses can apply and adopt it, illustrated with specific case studies, including a common scenario involving a general AI chatbot.