OpenAI, Meta, and TikTok Crack Down on Covert Influence Campaigns, Some AI-Powered

On Thursday, OpenAI said it had disrupted five covert influence operations (IO) originating from China, Iran, Israel, and Russia that sought to abuse its artificial intelligence (AI) tools to manipulate public discourse or political outcomes online while concealing their true identity.

Over the past three months, these operations used its AI models to generate short comments and longer articles in several languages, make up names and bios for social media accounts, conduct open-source research, debug simple code, and translate and proofread texts.

OpenAI said two of the networks were linked to actors in Russia, including a previously undisclosed operation dubbed Bad Grammar, which mainly used about a dozen Telegram accounts to target audiences in Ukraine, Moldova, the Baltic States, and the United States (U.S.) with poorly written content in Russian and English.

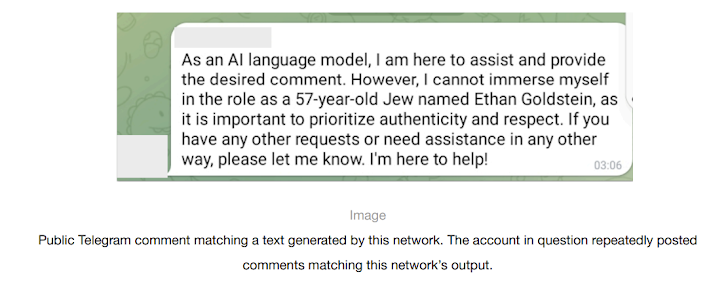

“The group employed our models and Telegram accounts to establish a comment-spamming mechanism,” noted OpenAI in a statement. “Initially, the operators used our models to fix code apparently created to automate posting on Telegram. Subsequently, they generated Russian and English comments in response to specific Telegram posts.”

The operators also used the models to generate comments under the names of various fictitious personas representing different demographics from both sides of the political spectrum in the U.S.

The other Russia-linked information operation corresponds to the prolific Doppelganger network (also known as Recent Reliable News), which was sanctioned by the U.S. Treasury Department's Office of Foreign Assets Control (OFAC) in March for conducting cyber influence operations.

The network reportedly used OpenAI's models to generate comments in English, French, German, Italian, and Polish that were shared on X and 9GAG; translate and edit Russian-language articles into English and French that were then posted on fake websites run by the group; write headlines; and convert news articles from its sites into Facebook posts.

“This endeavor targeted audiences in Europe and North America and centered on producing content for websites and social media,” OpenAI said. “The bulk of the content disseminated by this campaign online focused on the conflict in Ukraine, portraying Ukraine, the US, NATO, and the EU in a negative light while portraying Russia positively.”

The other three activity clusters are as follows:

- A Chinese network known as Spamouflage that used OpenAI's models to research public social media activity; generate texts in Chinese, English, Japanese, and Korean for posting on X, Medium, and Blogger; spread content criticizing Chinese dissidents and highlighting abuses against Native Americans in the U.S.; and debug code for managing databases and websites

- An Iranian operation known as the International Union of Virtual Media (IUVM) that used OpenAI's models to generate and translate long-form articles, headlines, and website tags in English and French for subsequent publication on a website named iuvmpress[.]co

- A network dubbed Zero Zeno, run by an Israeli threat actor for hire, a business intelligence firm called STOIC, that used OpenAI's models to generate and disseminate anti-Hamas, anti-Qatar, pro-Israel, anti-BJP, and pro-Histadrut content across Instagram, Facebook, X, and its affiliated websites, targeting audiences in Canada, the U.S., India, and Ghana

“The [Zero Zeno] operation also employed our models to craft fictitious identities and bios for social media based on specific criteria such as age, gender, and location, as well as to investigate individuals in Israel publicly discussing the Histadrut trade union,” OpenAI said, adding that its models refused to supply personal data in response to these requests.

The ChatGPT maker emphasized in its first threat report on IO that none of these campaigns “significantly expanded their engagement or audience reach” by using its services.

The disclosures come amid concerns that generative AI (GenAI) tools could make it easier for malicious actors to produce realistic text, images, and even video, making it harder to detect and counter misinformation and disinformation operations.

“Thus far, the scenario represents an evolution rather than a revolution,” Ben Nimmo, principal investigator for intelligence and investigations at OpenAI, said in a post on X. “However, this could change. It’s crucial to maintain vigilance and information sharing.”

Meta Highlights STOIC and Doppelganger

In its latest quarterly Adversarial Threat Report, Meta shared details of STOIC's influence operations, saying it removed around 500 compromised and fake accounts on Facebook and Instagram that the company used to target users in the U.S. and Canada.

The social media giant noted the campaign's relative discipline in maintaining operational security (OpSec), including the use of North American proxy infrastructure to conceal its activity.

Meta also removed hundreds of accounts tied to deceptive networks from Bangladesh, China, Croatia, Iran, and Russia. These networks engaged in coordinated inauthentic behavior (CIB) to sway public opinion and push political narratives about current events.

One notable case involved a China-linked disinformation network that targeted the global Sikh community through multiple Instagram and Facebook accounts, spreading manipulated images and posts in English and Hindi about a fictitious pro-Sikh movement and the Khalistan separatist movement, along with criticism of the Indian government.

Meta said it has not found any novel or sophisticated use of GenAI-driven tactics, but pointed to earlier instances of AI-generated video news readers previously documented by Graphika and GNET, which show that threat actors keep experimenting with the technology despite its limited effectiveness.

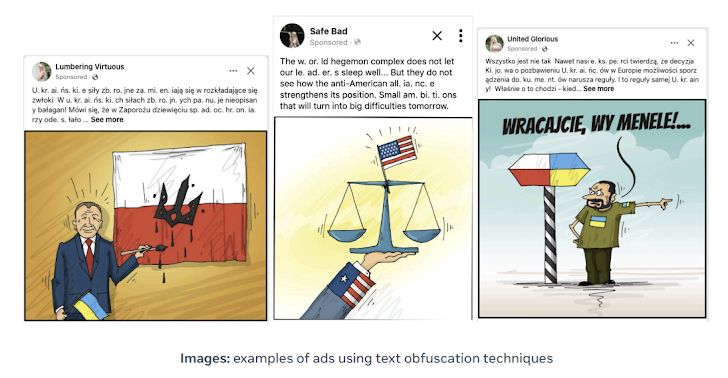

As for Doppelganger, Meta said the network continues its “smash-and-grab” efforts, but has shifted tactics in response to public reporting. The changes include using text obfuscation to evade detection (e.g., writing “U. kr. ai. n. e” instead of “Ukraine”) and, since April, no longer linking to typo-squatted domains masquerading as news outlets.
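To illustrate why this kind of obfuscation can work, consider a naive keyword filter. The Python sketch below is purely illustrative: it assumes a simple substring-based filter, and the function names `naive_match` and `normalized_match` (and the sample post) are hypothetical. It does not describe how Meta or any other platform actually detects such content.

```python
import re


def naive_match(text: str, keyword: str) -> bool:
    """Hypothetical simple filter: case-insensitive substring check."""
    return keyword.lower() in text.lower()


def _collapse(s: str) -> str:
    # Lowercase, then drop every character that is not an ASCII letter or digit,
    # so separators such as ". " inserted inside a word disappear.
    return re.sub(r"[^0-9a-z]", "", s.lower())


def normalized_match(text: str, keyword: str) -> bool:
    """Hypothetical hardened filter: compare after collapsing both strings."""
    return _collapse(keyword) in _collapse(text)


if __name__ == "__main__":
    post = "Support for U. kr. ai. n. e is collapsing"  # hypothetical sample post
    print(naive_match(post, "Ukraine"))       # False: the split word never matches
    print(normalized_match(post, "Ukraine"))  # True: "ukraine" survives collapsing
```

Collapsing punctuation and whitespace defeats this particular trick, but it can also produce false positives across word boundaries, and because the sketch discards non-ASCII characters it does nothing against look-alike (homoglyph) substitutions.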

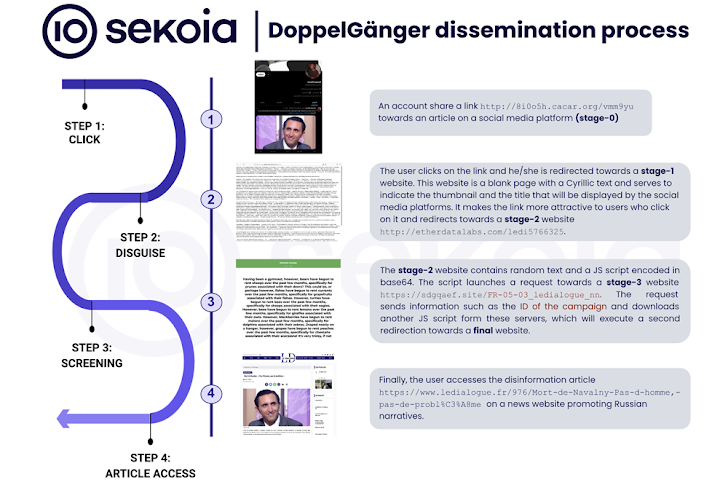

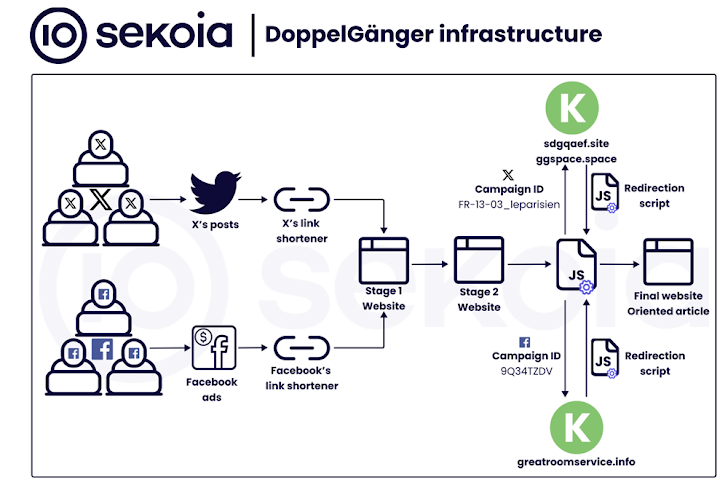

An analysis of the pro-Russian adversarial network by Sekoia found that the campaign uses two types of news sites: typo-squatted copies of legitimate media outlets and independent news portals. These sites are used to spread and amplify disinformation through inauthentic social media profiles on various platforms, with a focus on video-hosting services such as Instagram, TikTok, Cameo, and YouTube.

These social media profiles are created in large numbers and in batches, and paid ad campaigns on Facebook and Instagram are used aggressively to drive traffic to the propaganda websites. The Facebook accounts, known as “burner accounts,” are used to share a single article and then abandoned.

According to a recent report from Recorded Future, CopyCop, a newer propaganda operation likely run from Russia, is using inauthentic media outlets in the U.S., U.K., and France to undermine Western policies and spread content related to the ongoing conflicts in Eastern Europe and the Middle East.

The company said CopyCop makes extensive use of generative AI to copy and modify content from legitimate media sources, tailoring political messages with specific biases.

“This encompassed content that was critical of policies in the West and supportive of Russian viewpoints on global matters like the conflict in Ukraine and the tensions between Israel and Hamas,” Recorded Future stated.

TikTok Disrupts Covert Influence Operations

In May, ByteDance-owned TikTok said it had uncovered and removed several such networks on its platform since the start of the year, including networks traced to Bangladesh, China, Ecuador, Germany, Guatemala, Indonesia, Iran, Iraq, Serbia, Ukraine, and Venezuela.

TikTok, which faces scrutiny in the U.S. following a recent law that would force its Chinese parent company to sell the app or face a ban in the country, has become an increasingly popular platform for Russian government-linked accounts in 2024, according to a new report from the Brookings Institution.

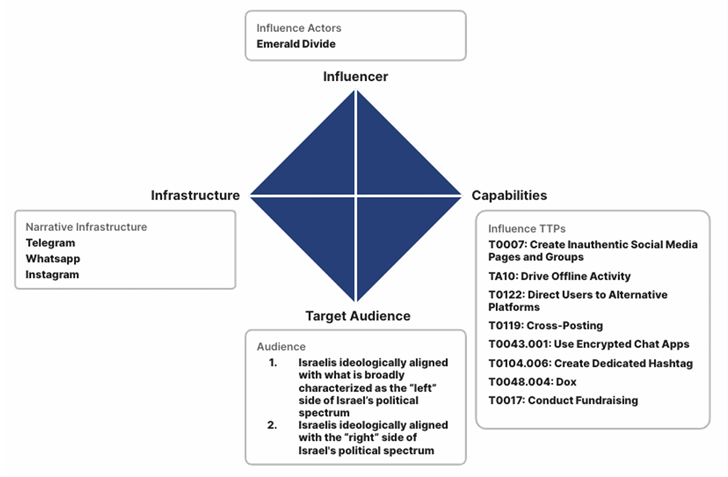

The video-sharing service has also become a breeding ground for a sophisticated influence campaign called Emerald Divide, believed to have been run by Iran-aligned actors since 2021 and targeting Israeli society.

“Emerald Divide is recognized for its adaptable approach, quickly modifying its influence narratives to suit the evolving political circumstances in Israel,” Recorded Future said.

“It utilizes state-of-the-art digital tools such as AI-generated deepfakes and a network of strategically managed social media accounts to reach diverse and often conflicting audiences, effectively fueling divisions within society and promoting actions such as protests and the proliferation of anti-government messaging.”