U.S.-based Wiz Research announced that it had responsibly disclosed a DeepSeek database that was open to the public, exposing chat logs and other sensitive information. DeepSeek has since secured the database, but the incident highlights the risks that come with generative AI models, particularly those offered internationally.

Over the past week, DeepSeek, a Chinese company, has caused a stir in the tech sector as its AI models have rivaled leading American generative AI products. In particular, DeepSeek’s R1 model competes directly with OpenAI o1 on some benchmarks.

How did Wiz Research come across DeepSeek’s public database?

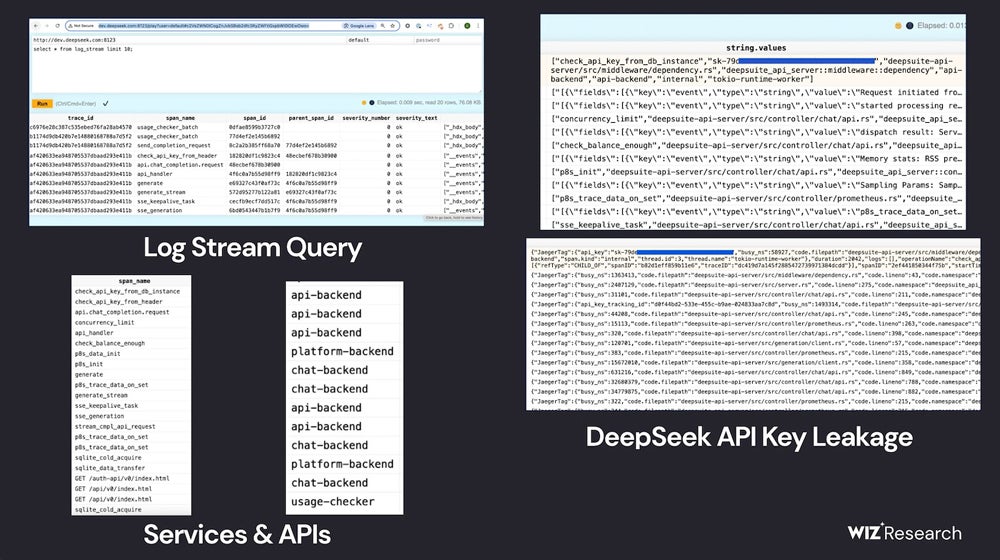

Gal Nagli, a cloud security researcher, detailed in a blog post how Wiz Research found a publicly accessible ClickHouse database belonging to DeepSeek. The exposure could have allowed an attacker to take control of the database and carry out privilege escalation attacks. Within the database, Wiz Research was able to view chat logs, backend information, log streams, API secrets, and operational details.

The team quickly located the ClickHouse database as they evaluated potential weaknesses within DeepSeek’s infrastructure.

“The discovery left us stunned and spurred us into immediate action, given the significance of what we found,” Nagli told TechRepublic in an email.

The team first assessed DeepSeek’s internet-facing subdomains, where two open ports stood out as unusual. Those ports led to DeepSeek’s database, which was hosted on ClickHouse, the open-source database management system. By browsing the tables in ClickHouse, Wiz Research found chat histories, API keys, operational metadata, and more.

Wiz Research emphasized that it did not run intrusive queries during its investigation, in keeping with ethical research practices.
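
For teams that want to check their own infrastructure for this kind of exposure, the following is a minimal sketch in Python, not a reconstruction of Wiz Research's method. It assumes the ClickHouse HTTP interface is listening on its default port, 8123 (the article does not say which ports were open), and it sends only a harmless `SELECT 1` without credentials. The function names and the hostname in the usage note are hypothetical, and the check should only be run against hosts you own or are authorized to test.

```python
import urllib.error
import urllib.request
from urllib.parse import quote, urlencode

# Default port of ClickHouse's HTTP interface (an assumption about the target).
CLICKHOUSE_HTTP_PORT = 8123


def build_probe_url(host: str, port: int = CLICKHOUSE_HTTP_PORT) -> str:
    """Build a URL that asks the HTTP interface to run a harmless query."""
    params = urlencode({"query": "SELECT 1"}, quote_via=quote)
    return f"http://{host}:{port}/?{params}"


def classify(status: int, body: str) -> str:
    """Interpret the response to an unauthenticated SELECT 1."""
    if status == 200 and body.strip() == "1":
        return "open"  # the query ran without any credentials
    if status in (401, 403):
        return "auth-required"  # the server rejected the anonymous request
    return "unknown"  # anything else needs a manual look


def probe(host: str, port: int = CLICKHOUSE_HTTP_PORT, timeout: float = 3.0) -> str:
    """Send the probe and report whether unauthenticated queries are accepted."""
    url = build_probe_url(host, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify(resp.status, body)
    except urllib.error.HTTPError as err:
        # HTTPError is raised for 4xx/5xx responses; classify by status code.
        return classify(err.code, "")
    except (urllib.error.URLError, OSError):
        return "unreachable"  # closed port, firewall, DNS failure, or timeout
```

Calling `probe("clickhouse.internal.example")` on a host you control returns `"open"` if anonymous queries succeed, which is a signal to put the service behind authentication and network restrictions.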

What implications does the publicly accessible database hold for DeepSeek’s AI?

After Wiz Research notified DeepSeek of the exposure, the AI company swiftly secured the database, averting any impact on DeepSeek’s AI products.

Nonetheless, the fact that the database was left open to potential attackers underlines how difficult it is to secure generative AI products.

“Whilst there is considerable focus on futuristic threats in AI security, the true danger often arises from fundamental risks, such as inadvertent exposure of databases to the public,” Nagli wrote in a blog post.

IT professionals should be cautious about adopting new and unproven products, particularly generative AI products, and give researchers time to find bugs and weaknesses in them. Where possible, build conservative adoption timelines into the company’s generative AI usage policies.

“As companies rush to embrace AI tools and solutions from an increasing array of startups and providers, it is crucial to bear in mind that we are entrusting these entities with sensitive data,” Nagli noted.

Depending on where they operate, IT teams may need to account for laws or security concerns specific to generative AI models that originate in China.

“For example, some elements of China’s historical context may not be fully or truthfully represented by these models,” Unmesh Kulkarni, who heads the gen AI division at the data science firm Tredence, told TechRepublic. “The data privacy implications of utilizing the hosted model are also ambiguous, deterring most global companies from engaging in such practices. Nevertheless, it is worth noting that DeepSeek’s models are open-source, facilitating deployment within a company’s internal cloud or network environment, thus tackling concerns related to data privacy or leakage.”

Nagli also recommended self-hosted models when TechRepublic reached him by email.

“The implementation of strict access controls, encryption of data, and network segmentation can further diminish risks,” he said. “Companies should ensure they have oversight and governance across the entire AI infrastructure to assess all risks comprehensively, including the use of potentially malicious models, exposure of training data, sensitivity of data utilized in training, susceptibilities in AI SDKs, exposure of AI services, and other compound risks that malevolent entities might exploit.”