Apple sets the standard for cloud-based AI security

Next week, Apple will unveil new Macs and the initial services from its Apple Intelligence suite.

To secure the cloud-based requests made through Apple Intelligence, Apple has put strong security measures and transparent privacy protocols in place for its Private Cloud Compute (PCC) system, which handles those requests.

This puts Apple ahead of the rest of the industry in building robust security and privacy protection for AI requests made through its cloud, and the move is already impressing security experts.

What does this mean? Apple has taken a significant step to harden Private Cloud Compute by opening the entire system to security testers. Working with the infosec community in this way is meant to build defenses that will safeguard the future of AI.

Make no mistake, this is of paramount importance.

As AI is set to pervade all aspects of our lives, we are faced with a crucial decision between a future dominated by surveillance on an unprecedented scale or the potential of powerful machine/human enhancements. Server-based AI lays the foundation for both scenarios. Additionally, as quantum computing looms closer, the data collected by non-private AI systems could be exploited and weaponized in unimaginable ways.

So, to secure tomorrow, we need to take action today.

Securing AI in the cloud

PCC’s primary purpose is to help safeguard that future and to make Apple Intelligence the most private and secure form of AI in the world. The system lets Apple run generative AI (genAI) models that need more processing power than an iPad, iPhone, or Mac can provide. It acts as the central hub for these AI requests and was designed from the start with privacy and security in mind. Craig Federighi, Apple’s Senior Vice President of Software Engineering, emphasized the importance of privacy when introducing PCC at WWDC.

Apple has pledged to “establish public trust” in its cloud-based AI systems by allowing security and privacy experts to inspect and verify the system’s end-to-end security and privacy protections. The security community is particularly excited because Apple has not only kept this promise but gone further, publishing an extensive set of resources for researchers.

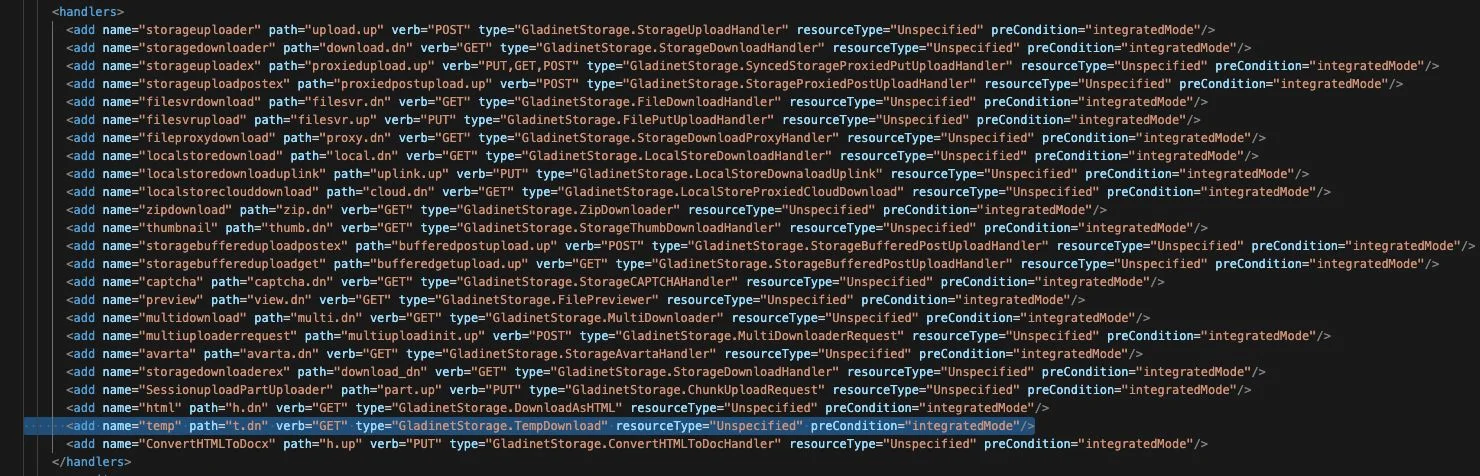

Resources provided include:

The PCC Security Guide

Apple has released the PCC Security Guide, an extensive 100-page document detailing the technical aspects of the system’s components and how they collaborate to secure AI processing in the cloud. This comprehensive guide delves into built-in hardware protections and the system’s response to various attack scenarios.

A Virtual Research Environment

Apple has also built a Virtual Research Environment (VRE) that gives security researchers a set of tools for analyzing PCC from a Mac. The VRE runs a PCC node in a virtual machine (VM), so researchers can test it thoroughly for security and privacy vulnerabilities.

These tools allow you to:

Explore and review PCC software releases.

Validate the transparency log.

Retrieve the binaries for each release.

Launch a release in a virtualized setting.

Conduct inferences on demonstration models.

Modify and debug the PCC software for deeper analysis.
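
To make the "validate the transparency log" step more concrete, here is a minimal Python sketch of how an append-only log can prove that a particular release is recorded in it. It assumes a generic Merkle tree in the style of RFC 6962 (the scheme used by Certificate Transparency). Apple's actual log format and tooling are described in the PCC Security Guide and may differ. All function names and the sample release strings below are hypothetical, and the proof carries explicit left/right flags as a simplification.

```python
import hashlib
from typing import List, Tuple

# Illustrative only: a generic RFC 6962-style Merkle log, NOT Apple's actual format.

def leaf_hash(data: bytes) -> bytes:
    # Leaves and interior nodes use different prefixes so one cannot pose as the other.
    return hashlib.sha256(b"\x00" + data).digest()

def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()

def _split(n: int) -> int:
    # Largest power of two strictly less than n (for n >= 2).
    k = 1
    while k * 2 < n:
        k *= 2
    return k

def merkle_root(leaves: List[bytes]) -> bytes:
    if not leaves:
        raise ValueError("log must contain at least one entry")
    if len(leaves) == 1:
        return leaf_hash(leaves[0])
    k = _split(len(leaves))
    return node_hash(merkle_root(leaves[:k]), merkle_root(leaves[k:]))

def inclusion_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    # Returns (sibling_hash, sibling_is_left) pairs, ordered from the leaf up to the root.
    if len(leaves) == 1:
        return []
    k = _split(len(leaves))
    if index < k:
        return inclusion_proof(leaves[:k], index) + [(merkle_root(leaves[k:]), False)]
    return inclusion_proof(leaves[k:], index - k) + [(merkle_root(leaves[:k]), True)]

def verify_inclusion(entry: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    # Recompute the path to the root using only the entry and the sibling hashes.
    h = leaf_hash(entry)
    for sibling, sibling_is_left in proof:
        h = node_hash(sibling, h) if sibling_is_left else node_hash(h, sibling)
    return h == root

if __name__ == "__main__":
    # Hypothetical log entries standing in for published release records.
    log = [b"release-1", b"release-2", b"release-3", b"release-4", b"release-5"]
    root = merkle_root(log)
    proof = inclusion_proof(log, 2)
    print(verify_inclusion(b"release-3", proof, root))
    print(verify_inclusion(b"tampered-release", proof, root))
```

The check succeeds for an untouched entry and fails for an altered one, because any change to the entry changes every hash on its path to the root. A verifier only needs the entry, a short list of sibling hashes, and the published root, not the whole log.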

Release of PCC source code

Apple has also made a significant move by releasing the PCC source code, under a license agreement, permitting researchers to conduct in-depth examinations for vulnerabilities. The source code includes components related to privacy, validation, and logging. It is now available on GitHub.

Bounty Program

Acknowledging the importance of incentivizing researchers, Apple has instituted a bounty program for identifying vulnerabilities in the PCC code. Researchers can potentially earn up to $1 million for discovering a flaw that allows arbitrary code execution with arbitrary entitlements or $250,000 for uncovering a method to access a user’s request data or sensitive information related to their requests.

There are various other categories, and Apple is dedicated to encouraging researchers, irrespective of the significance of their discoveries. In cases where a vulnerability poses a substantial risk to PCC, Apple is prepared to reward researchers generously. The company emphasizes that the highest bounties will be reserved for vulnerabilities that compromise user data and inference request data.

Apple’s initiative

In pursuing a degree of transparency unmatched in the industry, Apple is taking a calculated risk: it wants any weaknesses in its system to be found and disclosed rather than exploited or concealed. The reasoning is that state-sponsored attackers may already have the resources to learn how Apple’s security works, but they are unlikely to report the vulnerabilities they find to the company. By opening the system to a wider pool of security researchers, Apple expects more vulnerabilities to be discovered and reported, which should let it patch them more quickly.

Apple believes that by revealing these details, it can change the dynamics of security. Paradoxically, opening these security measures to scrutiny may make them more effective and more resistant to attack.

This represents Apple’s vision for the future of security in cloud-based AI processing. The company hopes to collaborate with the research community to bolster trust in the system while continuously enhancing its security and privacy aspects.

Significance of this development

This marks a pivotal moment for AI security. As an industry front-runner, Apple has redefined the level of transparency that should be expected from every company offering cloud-based AI services. If Apple can do it, others can too. Any organization or individual whose data or requests are handled by cloud-based AI systems now has good reason to demand a comparable degree of transparency and protection. Apple is once again leading the way.
