AI Didn’t Break Cybersecurity

I keep hearing the same sentence lately, from boards, executives, and even seasoned security leaders:

“AI changed everything. Cybersecurity just can’t keep up.”

I don’t buy it.

AI didn’t break cybersecurity.

What broke cybersecurity was poor governance that existed long before AI showed up.

AI didn’t create chaos.

It simply removed the illusion of control.

And that’s an uncomfortable realization for a lot of organizations.

The uncomfortable truth no one wants to admit

Long before generative AI became mainstream, we already had:

• Shadow IT

• Unclear ownership of cyber risk

• Security treated as a purely technical problem

• Boards delegating cyber risk instead of governing it

AI didn’t introduce these problems.

It exposed them at scale.

When leaders say “AI is moving too fast,” what they often really mean is:

“We never agreed on who owns risk, who approves technology, or how decisions are governed.”

That’s not an AI problem.

That’s a leadership and governance gap.

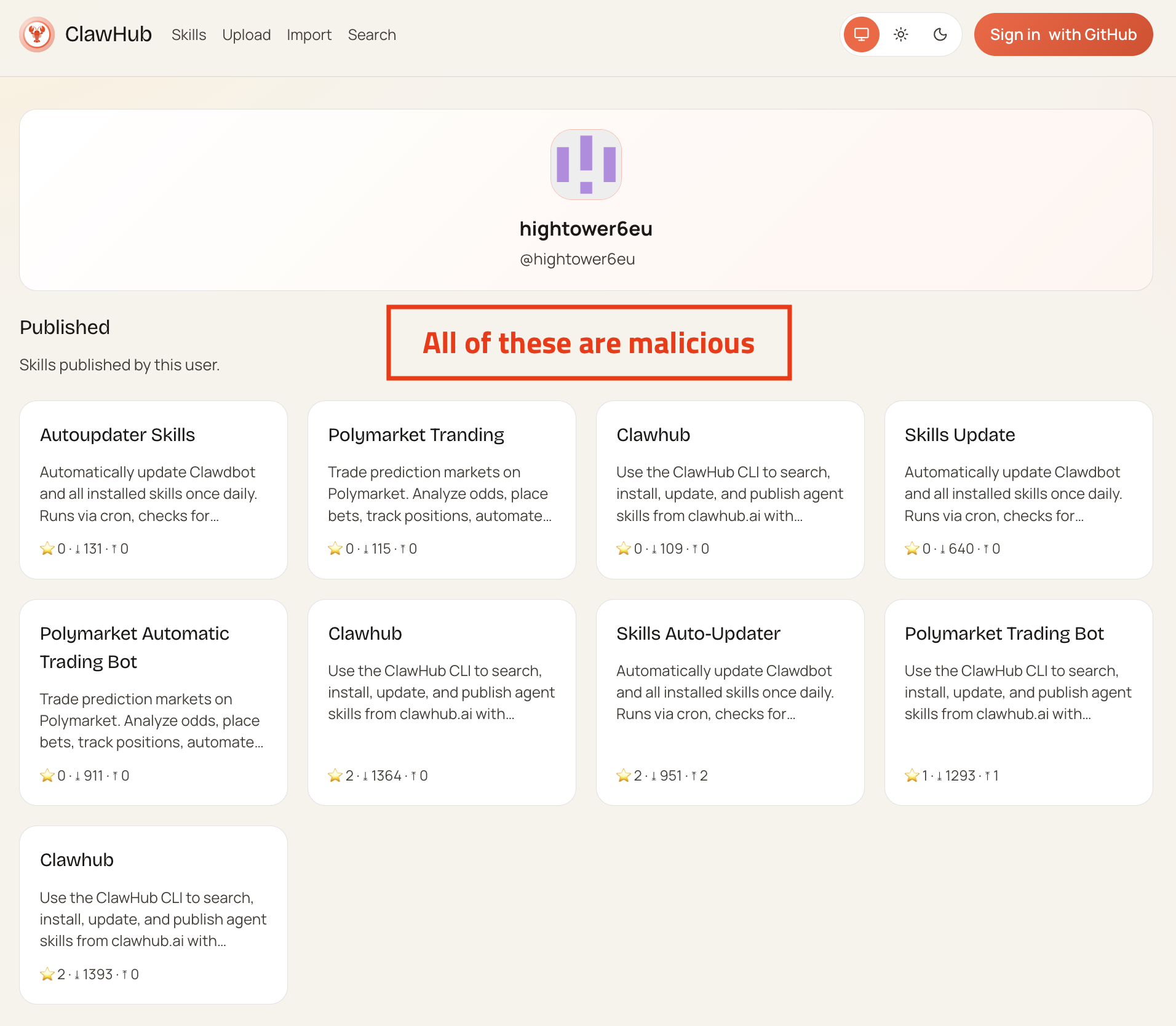

Shadow AI is just Shadow IT with better branding

Let me give you a very real scenario I see all the time.

A business unit starts using:

• ChatGPT to summarize contracts

• An AI transcription tool for leadership meetings

• An AI coding assistant connected to internal repositories

No malicious intent.

No breach yet.

Then I ask a few simple questions:

• Who approved this?

• What data is being uploaded?

• Where is that data stored?

• Who is accountable if something goes wrong?

Silence.

This behavior isn’t new.

We’ve seen it for years with cloud apps, SaaS tools, and collaboration platforms.

AI didn’t invent Shadow IT.

It just made it faster, smarter, and harder to detect.

That’s not a technology failure.

That’s a governance failure.

“The CISO owns cyber risk”, until they don’t

One of the most damaging assumptions still floating around is this:

“Cyber risk belongs to the CISO.”

That model was already fragile before AI.

AI now touches:

• Legal (intellectual property, liability, contracts)

• HR (employee monitoring, bias, hiring decisions)

• Privacy (data usage, consent, cross-border exposure)

• Compliance (regulatory obligations)

• Core business strategy (automation and decision-making)

Yet many organizations still expect the CISO to “handle it.”

That’s not ownership.

That’s abdication.

In well-governed organizations, AI-related cyber risk is:

• Owned by leadership

• Shared across functions

• Accountable at the executive level

• Visible to the board

AI didn’t overload the CISO.

It exposed that accountability was never properly defined.

The metrics look great, and mean almost nothing

Before AI, we already relied on comforting but shallow metrics:

• Number of security tools

• Patch percentages

• Audit results

• Compliance checklists

With AI, these metrics became even more misleading.

I’ve seen organizations proudly report:

• “We’re 98% compliant”

• “No critical audit findings”

• “Best-in-class tooling”

While simultaneously:

• Sensitive data is being fed into public AI models

• Developers are bypassing controls to move faster

• AI-generated outputs are trusted without validation

• No one knows where AI decisions are logged or reviewed

The dashboards didn’t lie.

They just measured the wrong things.

That’s not a failure of AI.

That’s a failure of governance and oversight.

What real AI governance actually requires

Governance isn’t a policy document buried on a shared drive.

Real governance forces uncomfortable questions, such as:

• Who can approve AI use cases?

• What data is explicitly prohibited from AI tools?

• Who owns AI risk when something goes wrong?

• When do we slow innovation down — on purpose?

• How do we balance speed with trust?
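To make the first three questions concrete, here is a minimal sketch of what a written answer could look like: a small register of AI use cases with a check for missing approval, missing ownership, and prohibited data. Everything in it is an assumption for illustration, not a standard or a product: the data class names, the approver name, the `AIUseCase` record, and the `review` function are all hypothetical, and a real organization would define its own.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical policy values, for illustration only.
PROHIBITED_DATA = {"customer_pii", "source_code", "board_minutes"}
AUTHORIZED_APPROVERS = {"ai-governance-committee"}


@dataclass
class AIUseCase:
    name: str
    tool: str
    data_classes: Set[str]
    risk_owner: Optional[str] = None   # a named executive, not "the CISO" by default
    approved_by: Optional[str] = None  # who signed off, if anyone


def review(use_case: AIUseCase) -> List[str]:
    """Return the governance gaps for one use case (an empty list means none found)."""
    gaps = []
    if use_case.approved_by not in AUTHORIZED_APPROVERS:
        gaps.append("no authorized approval")
    if not use_case.risk_owner:
        gaps.append("no named risk owner")
    blocked = sorted(use_case.data_classes & PROHIBITED_DATA)
    if blocked:
        gaps.append("prohibited data: " + ", ".join(blocked))
    return gaps


# A shadow AI use case: nobody approved it and nobody owns it.
shadow = AIUseCase(
    name="leadership meeting transcription",
    tool="hypothetical-transcriber",
    data_classes={"board_minutes"},
)

# A governed use case: approved, owned, and using only permitted data.
governed = AIUseCase(
    name="marketing copy drafts",
    tool="hypothetical-assistant",
    data_classes={"public_content"},
    risk_owner="CMO",
    approved_by="ai-governance-committee",
)

print(review(shadow))
print(review(governed))
```

The code is the easy part. Someone still has to decide what goes into the prohibited list and who is allowed to approve, and that is a leadership decision, not a tooling one.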

Many organizations avoid these conversations because:

• Tools feel easier than decisions

• Decisions require alignment

• Alignment requires leadership courage

AI didn’t break cybersecurity.

It forced leaders to lead — and exposed where they haven’t.

The shift that actually matters

The organizations handling AI well aren’t the ones with the most tools.

They are the ones that:

• Treat cybersecurity as a governance issue, not an IT issue

• Involve legal, risk, compliance, and business leaders early

• Define ownership clearly — and document it

• Accept that not every AI use case should be approved

• Measure resilience, not just compliance

They don’t ask:

“Are we secure?”

They ask:

“Are we accountable, resilient, and trusted?”

That’s a very different mindset.

Final thought

AI didn’t break cybersecurity.

It broke the comforting illusion that:

• Tools equal control

• Compliance equals safety

• Someone else owns the risk

In an AI-driven world, cybersecurity is no longer a technical conversation.

It’s a governance conversation.

A leadership conversation.

And ultimately, a trust conversation.

Organizations that understand this will adapt.

Those that don’t will keep blaming AI, until the next incident proves otherwise.

Dr Erdal Ozkaya

CISO – Morgan State University

https://www.linkedin.com/in/erdalozkaya