Supervising AI Conduct with a Virtual Machine Monitor

Engaging study: “Guillotine: Hypervisors for Isolating Malicious AIs.”

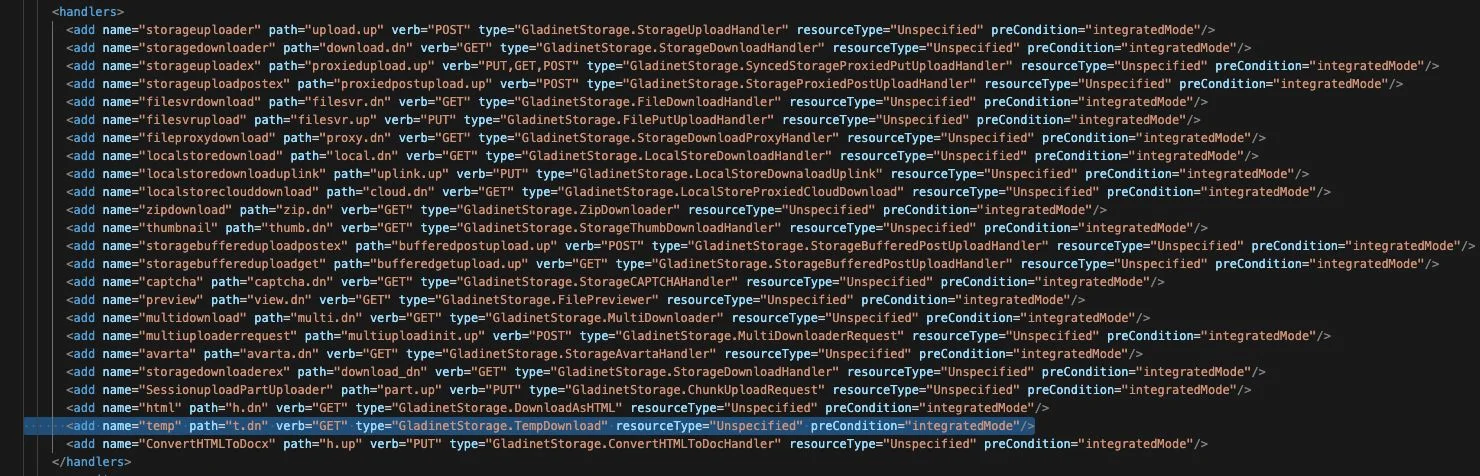

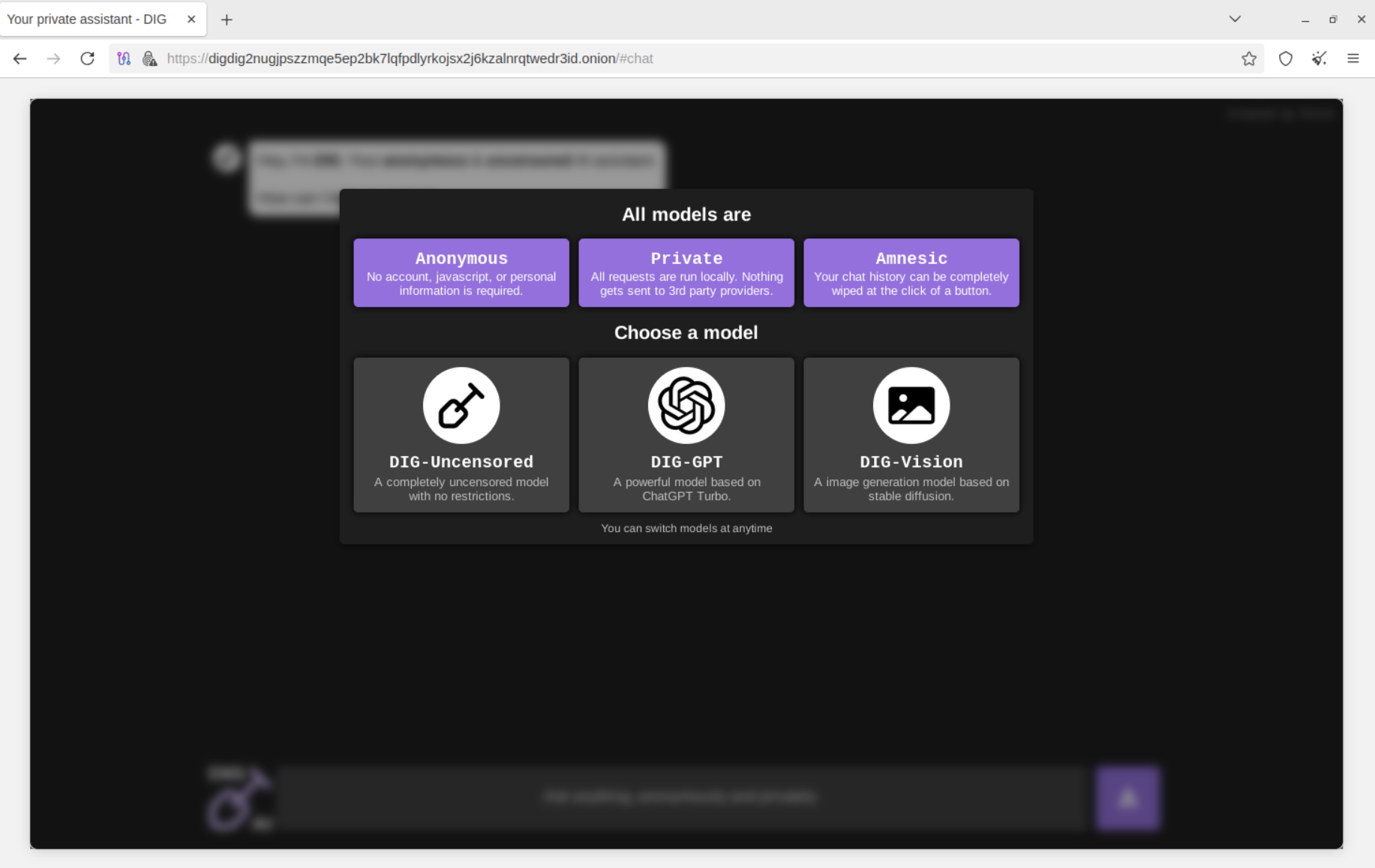

Summary: As artificial intelligence models become more deeply integrated into vital sectors such as finance, healthcare, and defense, their opaque behavior presents escalating risks to society. To address this risk, we introduce Guillotine, a virtual machine monitor (VMM) architecture for isolating powerful AI models, meaning models that, by accident or by design, can pose existential threats to humanity. While Guillotine adopts certain established virtualization techniques, it also requires fundamentally new isolation mechanisms to handle the distinct threat model posed by existential-risk AIs. For instance, a rogue AI could try to inspect the VMM software or the underlying hardware in order to later subvert that control plane; thus, a Guillotine VMM demands careful co-design of the VMM software and the CPUs, RAM, NIC, and storage devices that support it, to prevent side-channel leakage and more broadly eliminate avenues for an AI to exploit reflection-based vulnerabilities. In addition to such isolation at the software, network, and microarchitectural levels, a Guillotine VMM must also provide physical fail-safes more commonly associated with nuclear power plants, avionic platforms, and other kinds of critical systems. Physical fail-safes, such as electromechanical disconnection of network cables or the flooding of a datacenter that houses a rogue AI, provide defense in depth if software, network, and microarchitectural isolation is breached and a rogue AI must be temporarily shut down or permanently destroyed.

The basic idea is that many of the AI safety policies proposed by the AI community lack robust technical enforcement mechanisms. The concern is that, as models become more intelligent, they will be able to evade those safety policies. The paper proposes a set of technical enforcement mechanisms that could work against these malicious AIs.