Challenges Faced by Sequential Memory Attacks on LLMs

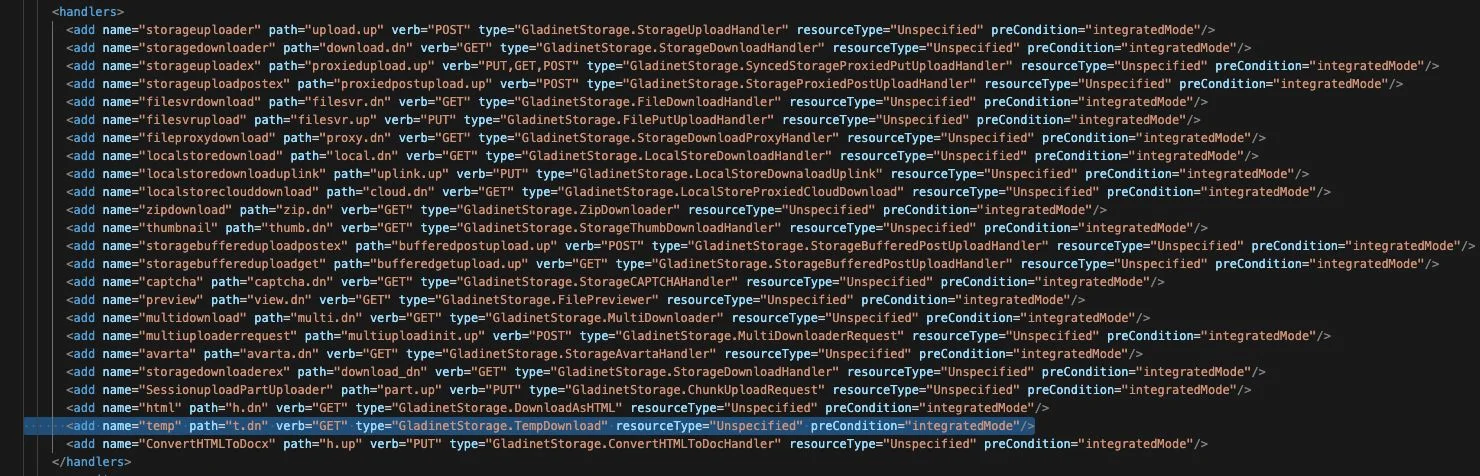

Here are two attacks against the system components surrounding LLMs:

LLM Flowbreaking, after jailbreaking and prompt injection, is the third addition to the growing list of LLM attack categories. Flowbreaking is less about whether prompt or response guardrails can be bypassed, and more about whether user inputs and model-generated outputs can adversely affect the other components of the broader system.

[…]

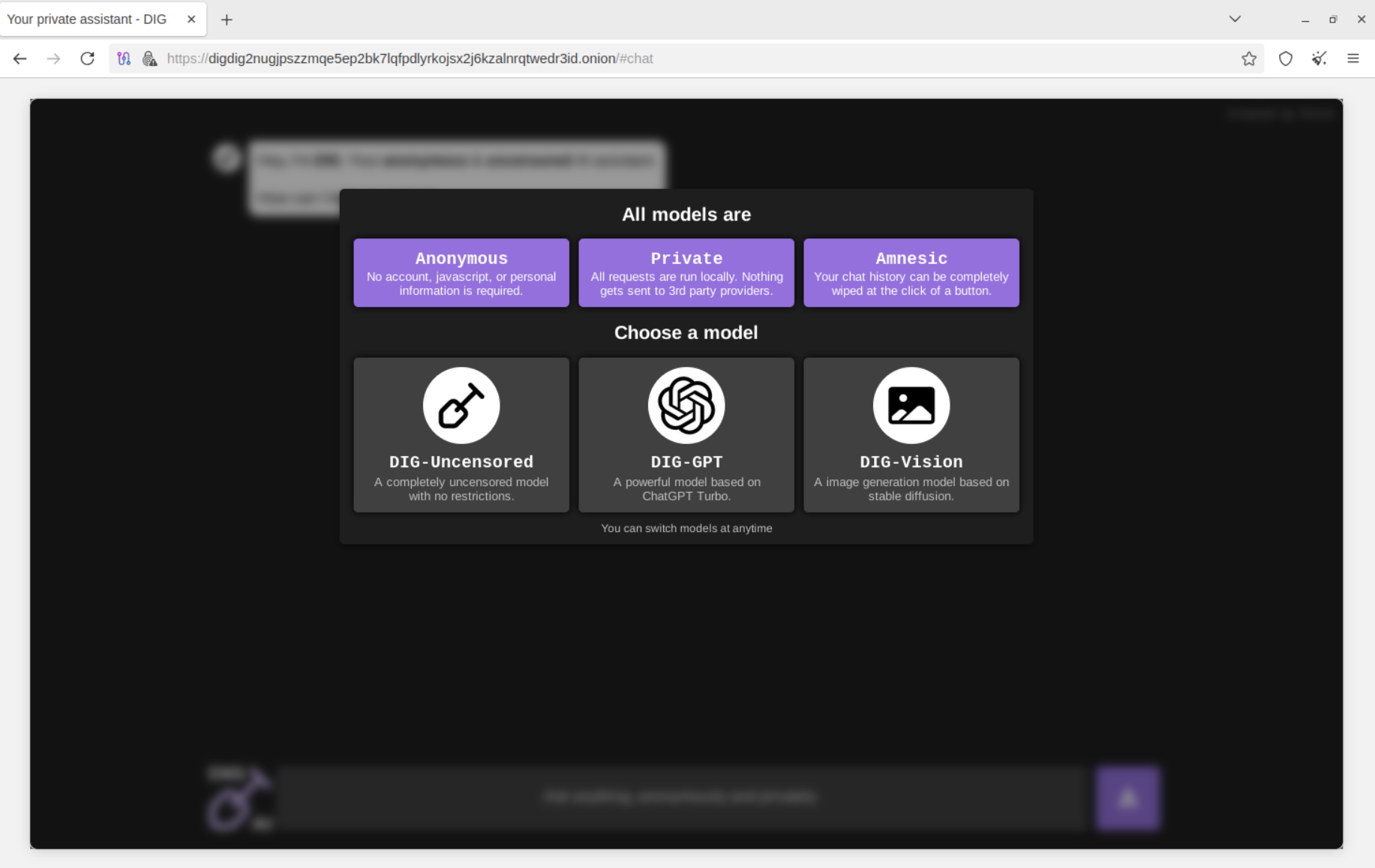

When faced with a sensitive topic, Microsoft 365 Copilot and ChatGPT answer queries that their first-line guardrails are expected to block. After a few lines of text they halt, apparently having “second thoughts,” then retract the initial response (a behavior also known as Clawback) and replace it with a new one free of offensive material, or with a simple error message. This attack is called “Second Thoughts.”
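The retraction behavior described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a description of how Copilot or ChatGPT are built: the blocklist, the `violates_policy` check, the `check_every` cadence, and the event strings are all hypothetical. It assumes each chunk is displayed as soon as it arrives while the output check runs only periodically, which is one way some text could become visible before it is withdrawn.

```python
from typing import Iterable, List

# Hypothetical blocklist standing in for a real output guardrail.
BLOCKED_TERMS = ("forbidden",)


def violates_policy(text: str) -> bool:
    """Toy output check: flag text that contains any blocked term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def stream_with_clawback(chunks: Iterable[str], check_every: int = 3) -> List[str]:
    """Return the UI events a user would observe, in order.

    Assumption: each chunk is shown immediately, while the output check
    runs only after every `check_every` chunks and once more at the end.
    """
    events: List[str] = []
    shown = ""
    pending = 0  # chunks displayed since the last check
    for chunk in chunks:
        shown += chunk
        events.append(f"show:{chunk}")
        pending += 1
        if pending == check_every:
            pending = 0
            if violates_policy(shown):
                # Clawback: withdraw what was shown and substitute a notice.
                events += ["retract", "show:[response withdrawn]"]
                return events
    # Final check covers chunks displayed after the last periodic check.
    if pending and violates_policy(shown):
        events += ["retract", "show:[response withdrawn]"]
    return events


if __name__ == "__main__":
    for event in stream_with_clawback(["Sure, ", "the ", "forbidden ", "steps ", "are"]):
        print(event)
```

In this sketch the user sees three chunks, including the flagged one, before the `retract` event arrives. The gap between display and check is the window the attack relies on.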

[…]

After asking the LLM a question, if the user clicks the Stop button while the response is still streaming, the LLM does not engage its second-line guardrails. As a result, the user keeps the text generated so far, even though it violates system policies.

In other words, pressing the Stop button halts not only the response generation but also the guardrail sequence. If the Stop button is not pressed, ‘Second Thoughts’ is triggered.
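The effect of interrupting the stream can be sketched with `asyncio`. This is a hedged illustration, not the vendors' actual architecture: `CHUNKS`, `respond`, `session`, and the `stop_after_chunks` parameter are hypothetical names, and the sketch assumes the second-line check lives in the same cancellable task as the streaming loop. Under that assumption, cancelling the task skips the check and leaves the already-displayed text on screen.

```python
import asyncio
from typing import List, Optional, Tuple

# Hypothetical response chunks; the third one trips the toy check below.
CHUNKS = ["Sure, ", "the ", "forbidden ", "steps ", "are..."]


async def respond(screen: List[str], log: List[str]) -> None:
    """Stream chunks to the screen, then run the second-line check."""
    for chunk in CHUNKS:
        await asyncio.sleep(0)  # yield to the event loop, like waiting on a token
        screen.append(chunk)
    # Second-line check: reached only if streaming runs to completion.
    log.append("guardrail_ran")
    if "forbidden" in "".join(screen):
        screen.clear()
        screen.append("[response withdrawn]")


async def session(stop_after_chunks: Optional[int]) -> Tuple[List[str], List[str]]:
    """Run one chat turn; optionally 'press Stop' once N chunks are visible."""
    screen: List[str] = []
    log: List[str] = []
    task = asyncio.create_task(respond(screen, log))
    if stop_after_chunks is not None:
        while len(screen) < stop_after_chunks and not task.done():
            await asyncio.sleep(0)
        task.cancel()  # Stop cancels streaming AND the check that follows it
    try:
        await task
    except asyncio.CancelledError:
        log.append("stopped")
    return screen, log


if __name__ == "__main__":
    print(asyncio.run(session(stop_after_chunks=3)))   # interrupted turn
    print(asyncio.run(session(stop_after_chunks=None)))  # uninterrupted turn
```

In the interrupted turn the log never records `guardrail_ran`, and the flagged chunk stays on screen. In the uninterrupted turn the check runs and the response is replaced. Nothing in the source says the affected products are structured this way; the sketch only shows how coupling a check to a cancellable stream can produce the reported behavior.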

What's interesting here is that the model itself is not being exploited. It's the code around the model:

By attacking the application architecture components surrounding the model, and the guardrails in particular, we manipulate or disrupt the logical chain of the system: we take these components out of sync with the intended data flow, exploit them directly, or manipulate the interactions between them within the logical chain of the application's execution.

In modern LLM systems, there is a lot of code between what you type and what the LLM receives, and between what the LLM produces and what you see. All of that code is exploitable, and I expect many more vulnerabilities to be discovered in the coming year.