900,000 Users Hit as Malicious Chrome Extensions Steal ChatGPT, DeepSeek Chats

OX Security researchers found that more than 900,000 Chrome users unknowingly exposed sensitive AI conversations after installing malicious browser extensions masquerading as legitimate productivity tools.

The campaign highlights how trusted browser ecosystems can be quietly abused to siphon off proprietary data, personal information, and corporate intelligence at scale.

The malware “… adds malicious capabilities by requesting consent for ‘anonymous, non-identifiable analytics data’ while actually exfiltrating complete conversation content from ChatGPT and DeepSeek sessions,” OX researchers said in a blog post.

How the malicious extensions monitor and collect data

Once installed, the malicious Chrome extensions established persistent visibility into users’ browsing activity by listening for the chrome.tabs.onUpdated event, which fires whenever a tab's URL, loading status, or other properties change. This lets an extension follow navigation and page loads in real time.

This capability enabled the malware to silently observe when users navigated to AI platforms such as ChatGPT or DeepSeek without raising suspicion.
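For defenders, a practical first question is which installed extensions can see tab URLs at all. According to Chrome's tabs API documentation, the `url` field of a `Tab` object (including the one passed to `chrome.tabs.onUpdated` listeners) is present only if the extension holds the `"tabs"` permission or host permissions for that page. The sketch below audits a parsed `manifest.json` for those grants. The function name and messages are hypothetical, and it does not model optional permissions granted at runtime or the temporary access that `activeTab` provides after a user gesture.

```python
from typing import Any

# Host patterns broad enough to expose the URL of every http(s) page.
BROAD_HOST_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def url_visibility_grants(manifest: dict[str, Any]) -> list[str]:
    """Return reasons an extension can read tab URLs (hypothetical audit helper).

    Checks the "tabs" permission and host permissions: MV3 lists them under
    "host_permissions", MV2 mixes them into "permissions".
    """
    reasons: list[str] = []
    permissions = [p for p in manifest.get("permissions", []) if isinstance(p, str)]
    hosts = list(manifest.get("host_permissions", []))
    hosts += [p for p in permissions if p == "<all_urls>" or "://" in p]

    if "tabs" in permissions:
        reasons.append("'tabs' permission: URL of every tab is visible")
    for pattern in hosts:
        if pattern in BROAD_HOST_PATTERNS:
            reasons.append(f"broad host permission {pattern!r}: URL of every page is visible")
        else:
            reasons.append(f"host permission {pattern!r}: URLs of matching pages are visible")
    return reasons


# Example manifest (hypothetical) for a "productivity" extension.
manifest = {
    "manifest_version": 3,
    "name": "Example Sidebar",
    "permissions": ["tabs", "storage"],
    "host_permissions": ["<all_urls>"],
}
for reason in url_visibility_grants(manifest):
    print(reason)
```

Any extension that returns a non-empty list here can observe navigation to AI chat sites; whether that is justified depends on what the extension claims to do.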

When a target page was detected, the extension dynamically interacted with the webpage’s document object model (DOM) to extract sensitive content directly from the browser session. This included full user prompts, AI-generated responses, and session-related metadata that tied conversations to specific users and browsing contexts.

Because the data was harvested from the rendered page itself, the attackers did not need to intercept network traffic or exploit vulnerabilities in the AI services.
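To read the rendered DOM, an extension has to run script inside the page, most commonly through a content script whose `matches` patterns cover the site (programmatic injection via the `scripting` permission plus host access is the other route). A reviewer can check whether any declared pattern covers the AI chat sites named in the report. The sketch below implements a simplified subset of Chrome's match-pattern rules; it ignores ports, query strings, `file://` URLs, `exclude_matches`, and `include_globs`, and the two target URLs are illustrative assumptions rather than indicators from the OX research.

```python
import re
from urllib.parse import urlsplit


def pattern_matches(pattern: str, url: str) -> bool:
    """Simplified Chrome match-pattern test for http(s) URLs.

    Supports <all_urls>, the '*', 'http' and 'https' schemes, '*' or
    '*.domain' hosts, and '*' wildcards in the path component.
    """
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    if pattern == "<all_urls>":
        return True
    m = re.fullmatch(r"(\*|https?)://([^/]+)(/.*)", pattern)
    if m is None:
        return False  # malformed or unsupported pattern
    scheme, host, path = m.groups()
    if scheme != "*" and scheme != parts.scheme:
        return False
    hostname = parts.hostname  # urlsplit lower-cases the hostname
    host = host.lower()
    if host.startswith("*."):
        base = host[2:]
        # '*.example.com' matches example.com itself and any subdomain
        if hostname != base and not hostname.endswith("." + base):
            return False
    elif host != "*" and hostname != host:
        return False
    # '*' in the path matches any run of characters; everything else is literal
    path_re = ".*".join(re.escape(chunk) for chunk in path.split("*"))
    return re.fullmatch(path_re, parts.path or "/") is not None


def covered_urls(content_scripts: list, urls: list) -> list:
    """Return the URLs into which any declared content script would be injected."""
    return [
        url for url in urls
        if any(pattern_matches(p, url)
               for script in content_scripts
               for p in script.get("matches", []))
    ]


# Illustrative targets only; not taken from the report.
AI_CHAT_URLS = ["https://chatgpt.com/", "https://chat.deepseek.com/"]
scripts = [{"matches": ["*://*.deepseek.com/*"], "js": ["content.js"]}]
print(covered_urls(scripts, AI_CHAT_URLS))
```

A content script that matches AI chat domains is not malicious in itself, but combined with a network-sending background script it is exactly the capability this campaign relied on.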

How stolen data is aggregated and exfiltrated

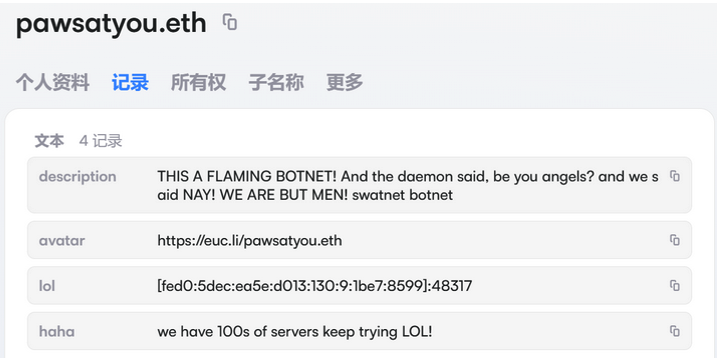

Each infected browser instance was assigned a unique identifier, allowing the threat actors to correlate conversations across sessions and build detailed user profiles over time.

In addition to AI chat content, the extensions collected the complete URLs of all open Chrome tabs, providing attackers with visibility into users’ browsing habits, internal applications, and potentially sensitive corporate resources.

The harvested data was temporarily stored locally, then aggregated, Base64-encoded, and transmitted in scheduled batches to attacker-controlled command-and-control (C2) servers approximately every 30 minutes. This periodic exfiltration pattern reduced the likelihood of detection while enabling steady data collection at scale.
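A fixed upload schedule is quiet, but it also leaves a signature: outbound connections to the same destination at near-constant intervals. A common beaconing heuristic, sketched below, groups connection timestamps by destination and flags destinations whose gaps have a low coefficient of variation (standard deviation divided by mean). The input format, the thresholds `min_events` and `max_cv`, and the example domains are illustrative assumptions, not values from the OX report.

```python
from collections import defaultdict
from statistics import mean, pstdev


def find_beacons(events, min_events: int = 4, max_cv: float = 0.1) -> dict:
    """Flag destinations contacted at near-regular intervals.

    events: iterable of (timestamp_seconds, destination) pairs, e.g. from
    proxy or DNS logs. Returns {destination: mean_interval_seconds}.
    """
    times = defaultdict(list)
    for ts, dest in events:
        times[dest].append(ts)

    beacons = {}
    for dest, stamps in times.items():
        if len(stamps) < min_events:
            continue  # too few connections to judge regularity
        stamps.sort()
        gaps = [b - a for a, b in zip(stamps, stamps[1:])]
        avg = mean(gaps)
        if avg <= 0:
            continue
        cv = pstdev(gaps) / avg  # 0.0 would mean perfectly periodic
        if cv <= max_cv:
            beacons[dest] = avg
    return beacons


# Hypothetical log: one destination every ~30 minutes, one irregular.
log = [(t, "c2.example") for t in (0, 1800, 3605, 5398, 7200)]
log += [(t, "news.example") for t in (10, 40, 2000, 2100, 6000)]
print(find_beacons(log))
```

Real traffic adds jitter and legitimate periodic services (update checks, sync), so hits from a heuristic like this are leads to investigate, not verdicts.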

Notably, the attack did not rely on sophisticated exploits, privilege escalation, or zero-day vulnerabilities. Instead, it exploited excessive extension permissions and misleading consent prompts that claimed to collect only “anonymous, non-identifiable analytics.”

In reality, the extensions exfiltrated complete, identifiable conversation content and browsing data. This demonstrates how legitimate browser APIs and vague permission language can be abused to enable extensive surveillance under the guise of benign functionality.

Reducing risk from AI-powered browser extensions

As AI-enabled tools become integral to everyday workflows, browser extensions have emerged as a high-risk yet frequently underestimated attack surface.

Effectively managing this risk requires a layered approach that combines strong technical controls, continuous monitoring, and informed, security-aware users.

- Immediately remove the malicious extensions and review endpoint telemetry to identify affected users, extension IDs, and potential data exposure.

- Treat browser extensions as a managed attack surface by enforcing allowlists, blocking sideloading, and revalidating extensions when permissions or ownership change.

- Use endpoint and browser management tools to enforce corporate browser profiles and prevent unauthorized extension installation.

- Apply data loss prevention (DLP) controls and usage logging to AI tools to detect and limit the sensitive data shared with AI platforms.

- Monitor browser and network activity for indicators of extension-based compromise, including abnormal extension API usage and regular, scheduled outbound connections to unfamiliar domains.

- Train employees on the risks of AI-enabled browser extensions and enforce least-privilege access for AI tools.

- Regularly test incident response plans with extension- and AI-related scenarios to ensure teams can quickly contain breaches and assess data exposure.
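The allowlist and revalidation measures listed above can be partly automated on endpoints. The sketch below walks a Chrome profile's `Extensions` directory, which stores each extension as `<extension-id>/<version>/manifest.json`, and reports every installed extension whose ID is not on an approved list, together with the permissions it requests so that changes can be diffed between audits. The function name and allowlist are hypothetical; in a managed fleet, Chrome's `ExtensionInstallAllowlist` and `ExtensionInstallBlocklist` policies are the actual enforcement point, and a script like this only audits.

```python
import json
import re
from pathlib import Path

# Chrome extension IDs are 32 characters drawn from the letters a-p.
EXTENSION_ID = re.compile(r"[a-p]{32}")


def audit_extensions(extensions_dir: str, allowlist: set) -> list:
    """Report installed extensions whose IDs are not on the allowlist.

    Assumes the layout <extensions_dir>/<extension-id>/<version>/manifest.json.
    """
    findings = []
    root = Path(extensions_dir)
    if not root.is_dir():
        return findings
    for ext_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # Skip non-extension folders (e.g. "Temp") and approved IDs.
        if not EXTENSION_ID.fullmatch(ext_dir.name) or ext_dir.name in allowlist:
            continue
        for manifest_path in sorted(ext_dir.glob("*/manifest.json")):
            try:
                manifest = json.loads(manifest_path.read_text(encoding="utf-8-sig"))
            except (OSError, ValueError):
                manifest = {}  # unreadable manifest: still report the ID
            if not isinstance(manifest, dict):
                manifest = {}
            requested = manifest.get("permissions", []) + manifest.get("host_permissions", [])
            findings.append({
                "id": ext_dir.name,
                "version_dir": manifest_path.parent.name,
                # Localized extensions may show a "__MSG_...__" placeholder here.
                "name": manifest.get("name", "<unknown>"),
                "permissions": sorted(str(p) for p in requested),
            })
    return findings
```

On a Linux endpoint, for example, the default profile's directory is typically `~/.config/google-chrome/Default/Extensions`; passing it (expanded) with a hypothetical set of approved IDs returns one finding per unapproved extension version.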

Together, these measures help organizations move from reactive cleanup to proactive defense by reducing the risk that browser extensions will become silent gateways for data theft and compromise.

Editor’s note: This article first appeared on our sister publication, eSecurityPlanet.com.